Limiting production of events with large hit counts

Introduction

As discussed at the S&C meeting, Jerome proposed to avoid reconstructing events which obviously have an excessive number of hits in the TPC. Such events were common (see "Some AuAu7 event images") during the Au+Au 7.7 GeV operations in Run 10. To implement this, a study was needed of the TPC occupancy in the data (total hit count, and fraction of the live TPC with hits). This page shows the results of that study.

_____________

Number of TPC Hits Per Event

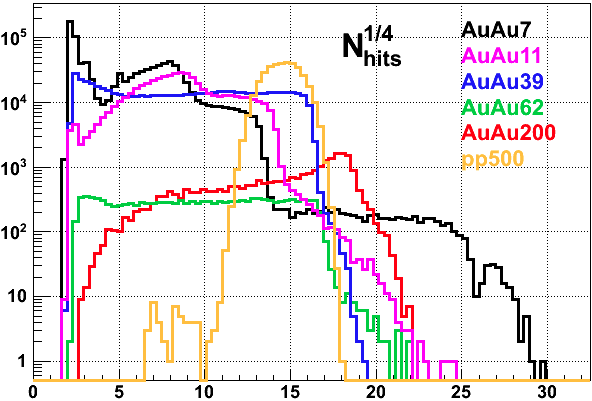

Here are some distributions of Nhits^(1/4) from Runs 9 & 10. This is expected to be a plateau in minimum bias AuAu collisions given that Nhits should scale with Nch. This plot is not strictly made from minimum bias data, so there are a variety of other features, but the AuAu39 and AuAu62 do demonstrate this plateau rather well. The AuAu200 is probably biased by the high tower triggers, and a high side tail is evident in some of the distributions from pile-up, and pp500 is predominantly pile-up. I do not have a good explanation for some mid-multiplicity artifacts (false [background] triggers?). The AuAu7 distribution, however, clearly shows a large contribution of high multiplicity events, as previously noted.

The above plot was made by grep-ing the number of TPC hits as reported in production log files (all TPC hits, not just hits on tracks). There is an inherent bias in that these logs are saved only when production succeeds (i.e. the job does not get "hung"). This probably cuts off the upper end of at least the AuAu7 distribution, because higher hit count events may be correlated with the jobs which got hung and did not finish in a reasonable time (the subject of RT ticket 1989).

I tested a chain which ran production without tracking, but concluded that an even faster way to obtain a less truncated distribution of this high count region might come from the RTS_EXAMPLE code to process DAQ files. In particular, I modified tpc_rerun to just sum the number of hits in the events and report it. This gave numbers that were on average 0.25% (a quarter of a percent) higher than what I used from the logs, and I deemed this discrepancy negligible.

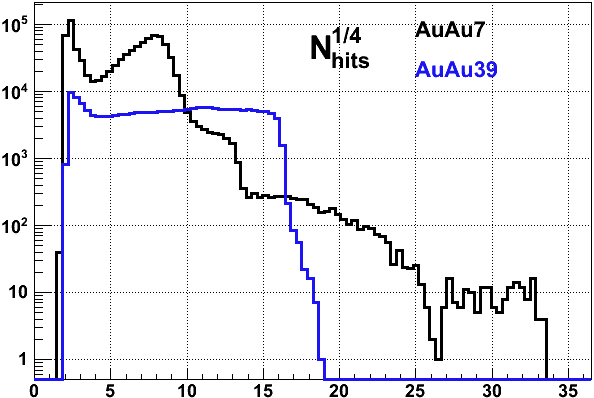

The resulting Nhits^(1/4) distributions from some random AuAu7 and AuAu39 DAQ files I found on disk appear in the accompanying plot. Note that the AuAu39 data looks as it did before, giving me some confidence in the result. The AuAu7 data reach notably higher, to as much as 1.25M hits in just this limited sample.

_____________

Fractional Occupancy

Each TPC sector has 1750 inner + 3940 outer = 5690 pads. There are 24 sectors, so 136,560 total pads. We read out 400 time buckets, so we have 54.6M possible pixels. However, the minimum extent of a cluster is perhaps 2x3 = 6 pixels, so the theoretical maximum number of clusters is 9.1M. In reality, those clusters need some space between each other, and other than prompt hits there should be only some noise before the gated grid opens, so this maximum is perhaps more realistically somewhere around 3-5M. Further, typical clusters are a little larger than this, so the maximum may even more realistically be only 2-4M. This means that we are considering events in which the TPC holds possibly half of its maximum possible number of clusters!

Additionally, dead regions of the TPC should be accounted for in such a fractional calculation, and this remains to be done.
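The counting argument above can be reproduced with a few lines of arithmetic. This is only a sketch using the numbers quoted in the text; the function and constant names are made up for illustration, and the 2M "realistic" capacity in the last line is simply the low end of the rough 2-4M estimate.

```python
# Back-of-the-envelope TPC capacity, using only the numbers quoted in the text.
INNER_PADS_PER_SECTOR = 1750
OUTER_PADS_PER_SECTOR = 3940
N_SECTORS = 24
TIME_BUCKETS = 400
MIN_CLUSTER_PIXELS = 2 * 3  # smallest plausible cluster footprint (2x3 pixels)


def tpc_capacity():
    """Return (pads per sector, total pads, total pixels, theoretical max clusters)."""
    pads_per_sector = INNER_PADS_PER_SECTOR + OUTER_PADS_PER_SECTOR
    total_pads = pads_per_sector * N_SECTORS
    total_pixels = total_pads * TIME_BUCKETS
    max_clusters = total_pixels // MIN_CLUSTER_PIXELS
    return pads_per_sector, total_pads, total_pixels, max_clusters


def occupancy_fraction(n_hits, capacity):
    """Fraction of an assumed cluster capacity used by n_hits."""
    return n_hits / capacity


pads_per_sector, total_pads, total_pixels, max_clusters = tpc_capacity()
print(f"pads per sector:      {pads_per_sector}")
print(f"total pads:           {total_pads}")
print(f"total pixels:         {total_pixels / 1e6:.1f}M")
print(f"theoretical clusters: {max_clusters / 1e6:.1f}M")
# Largest AuAu7 event in the limited sample (1.25M hits) against the
# low end of the rough 2-4M realistic capacity (an assumption, not a measurement).
print(f"occupancy at 2M capacity: {occupancy_fraction(1.25e6, 2e6):.0%}")
```

Against the 4M end of the estimate the same event would fill roughly a third of the capacity, which is why the text says only "possibly half".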

_____________

pp500

Coming back to the pp500 data, the peak is just below 15, but the upper end of the distribution reaches approximately 18. As this is only a fraction of the data, the upper limit in the full data may be near 19. It is important to recognize that RHIC projections currently forecast by 2014 an increase of x6 in the peak luminosities over that achieved in 2009. Improved acquisition of data early in the RHIC fills and stochastic cooling might help us take data closer to the peak luminosities, so it could be nearly a x10 increase in peak luminosity during data acquisition. On the Nhits^(1/4) plot, x10 becomes a x1.8 shift on the horizontal axis. So ~15 becomes ~27, and the upper limit on the distribution could be above 30!

This makes it all the more obvious that a strict cut for all datasets at something like 27 or 28 is not appropriate and that a different cut will be needed for different datasets. It also remains to be seen whether the topology of the hits in such high luminosity pp500 will suffer from the occupancy in the way that these AuAu7 events do. In particular, the AuAu7 events appear to originate upstream, so tracks are at large pseudorapidity. In pp500, the tracks will be pile-up tracks from mid-rapidity with smaller dip angles, which may be handled better by the track finding codes.
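The axis shift quoted above follows from taking the fourth root of the scale factor. A minimal sketch, assuming the pile-up-dominated hit count scales linearly with luminosity (that linearity is an assumption of this estimate, and the function names are illustrative only):

```python
def quartic_root_shift(factor):
    """Multiplicative shift on an Nhits^(1/4) axis when Nhits scales by `factor`."""
    return factor ** 0.25


def scaled_axis_position(position, factor):
    """New Nhits^(1/4) position after Nhits is multiplied by `factor`."""
    return position * quartic_root_shift(factor)


LUMINOSITY_FACTOR = 10  # the "nearly x10" increase discussed in the text

print(f"axis shift: x{quartic_root_shift(LUMINOSITY_FACTOR):.2f}")
for label, position in [("peak", 15), ("upper end (sample)", 18), ("upper end (full data?)", 19)]:
    shifted = scaled_axis_position(position, LUMINOSITY_FACTOR)
    print(f"{label}: {position} -> {shifted:.1f}")
```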

_____________

Implementation

Dmitry & I set up a new table to use (see "New table for limiting production of events with excessive TPC hits"), and I decided on placing the hit maximum cut on hits within a sector, as it is the local population of hits which is more of a concern for tracking than the full TPC (e.g. if we count 100k hits in the entire TPC, it may not be a problem, but if those 100k hits are predominantly in one sector, it would be a problem). The maximum should also be adjusted (reduced) per sector based on the live fraction of the sector (i.e. portions of the sector may be dead due to disabled RDOs and Anode Sections). It also occurred to me that an entire sector may be considered dead due to turned off anodes, yet the RDOs may still be turned on (after a trip, for example). There will be noise in the sector readout in this case, so the adjustment for dead regions must not bring the maximum number of allowed hits to zero. Instead, the reduced maximum will have a lower bound of 10% to stay above noise.

One suggestion had been to place the limit on the percentage of maximum possible TPC hits instead of an absolute number (albeit one that is reduced in specific sectors to account for dead regions). I have decided that there are too many variable factors (as discussed in "New table for limiting production of events with excessive TPC hits") to calculate the maximum on the fly and simply trust it year after year. Since different datasets may need a different maximum anyhow, I leave it to the experts who will fill this table to assess what is a reasonable absolute maximum.
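The per-sector logic described above can be sketched as follows. This is not the code in StTpcHitMaker or StTpcRTSHitMaker; all names are hypothetical, and it assumes the 10% lower bound applies to the fraction of the nominal per-sector maximum.

```python
MIN_FRACTION = 0.10  # assumed floor on the scaling, so noise in a "dead" sector is tolerated


def adjusted_sector_max(nominal_max, live_fraction):
    """Scale the nominal per-sector maximum by the live fraction, floored at 10%."""
    fraction = min(1.0, max(MIN_FRACTION, live_fraction))
    return nominal_max * fraction


def should_skip_event(hits_per_sector, live_fractions, nominal_max):
    """Skip the event if any single sector exceeds its adjusted maximum."""
    return any(
        hits > adjusted_sector_max(nominal_max, live)
        for hits, live in zip(hits_per_sector, live_fractions)
    )


N_SECTORS = 24
all_live = [1.0] * N_SECTORS

# 100k hits spread evenly over the TPC: no sector is close to a 12k limit.
spread = [100_000 // N_SECTORS] * N_SECTORS
# The same 100k hits concentrated in one sector: that sector is far over the limit.
concentrated = [100_000] + [0] * (N_SECTORS - 1)

print(should_skip_event(spread, all_live, 12_000))        # False
print(should_skip_event(concentrated, all_live, 12_000))  # True
```

A sector whose anodes are off (live fraction 0) still gets 10% of the nominal maximum here, so RDO noise alone does not cause the event to be skipped unless it exceeds that floor.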

These checks are now performed in StTpcHitMaker and StTpcRTSHitMaker, and the event is skipped if a maximum is exceeded. As of 2010-08-31, the codes, DB tables, and chosen maxima (see below) are in place in DEV. These are the codes which were changed:

- StDb/idl/tpcMaxHits.idl
- StRoot/StDetectorDbMaker/St_tpcMaxHitsC.h
- StRoot/StDetectorDbMaker/StDetectorDbChairs.cxx
- StRoot/StTpcHitMaker/StTpcHitMaker.cxx
- StRoot/StTpcHitMaker/StTpcHitMaker.h
- StRoot/StTpcHitMaker/StTpcRTSHitMaker.cxx
- StRoot/StTpcHitMaker/StTpcRTSHitMaker.h
- StRoot/macros/bfc.C

Note that the first two files listed are new and did not previously exist in SL10h. As of 2010-08-31, none of these codes have otherwise changed since SL10h, so propagating these new versions into both SL10h and SL10i should have no other effects beyond this intended limitation of producing these events.

_____________

Choosing Maxima

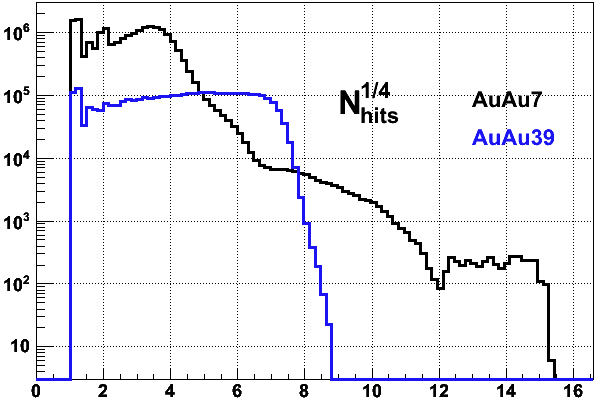

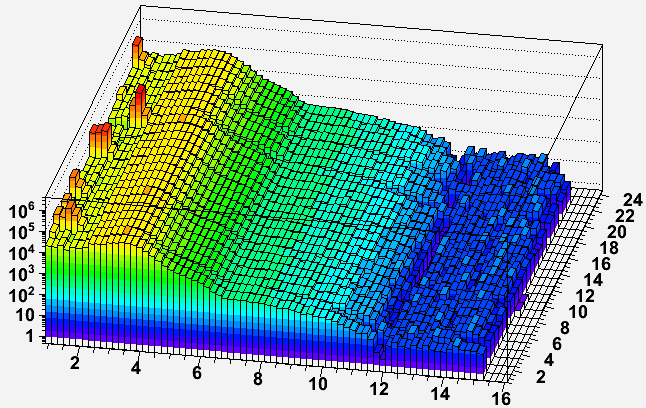

Here are some sector-wise distributions. The first is the distribution of hits in all sectors from some AuAu7 and AuAu11 data, and the second is the distribution of hits in each sector for AuAu7 data only. (As a side-note, I re-learned that I had to exclude sector 14 data from days 137-138 when it was having gated grid problems, as it was responsible for a peak at 5, which one can see a little bit in the first plot on this page where I did not exclude it.) Interestingly, the sector-wise plots also show this change in behavior at 12 [(12^4 x 24)^(1/4) = 26.5 on the above plots], probably indicating a different class of events above this. And no individual sectors show any gross differences in behavior from the others (with the exception of some low multiplicity effects which do not concern us here, and there are some minor differences due to sectors with some dead regions).

I have seen that a AuAu7 event with 350k hits total was enough to get the tracking hung. Dividing by 24 sectors gives about 15k per sector. Note however that a few AuAu11 jobs also got hung, and in the earlier overall-TPC-wise plots above, AuAu11 reached 25^4 = 390k; dividing by 24, that's 16k per sector. So we should probably set a limit below these but well above the portions of the distributions which appear to be due to physics-worthy collisions. I propose to place it at 12k hits per sector, sector-wise Nhits^(1/4) = ~10.5, at least for the 7 and 11 GeV datasets. For the other Run 10 datasets, this might be a bit stringent if there's acceptable pile-up, and I propose to set it at 20k hits per sector, sector-wise Nhits^(1/4) = ~11.9, comparable to an overall Nhits^(1/4) = ~26.3.
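The conversions between hit counts, per-sector averages, and positions on the Nhits^(1/4) axes can be checked with a short script. It is a sketch only: the helper names are illustrative, and the "overall equivalent" assumes every one of the 24 sectors sits exactly at the per-sector value.

```python
N_SECTORS = 24


def quartic_root(n_hits):
    """Position of a hit count on an Nhits^(1/4) axis."""
    return n_hits ** 0.25


def per_sector_average(total_hits):
    """Average hits per sector for a given whole-TPC hit count."""
    return total_hits / N_SECTORS


def overall_equivalent(hits_per_sector):
    """Whole-TPC Nhits^(1/4) if all sectors held `hits_per_sector` hits."""
    return quartic_root(hits_per_sector * N_SECTORS)


# Events known to hang the tracking.
print(f"AuAu7, 350k hits:   {per_sector_average(350_000):.0f} per sector")
print(f"AuAu11, 25^4 hits:  {25 ** 4} total, {per_sector_average(25 ** 4):.0f} per sector")

# Proposed per-sector maxima.
for limit in (12_000, 20_000):
    print(
        f"{limit} per sector: sector-wise Nhits^(1/4) = {quartic_root(limit):.2f}, "
        f"overall Nhits^(1/4) = {overall_equivalent(limit):.2f}"
    )

# Sector-wise change in behavior at 12, mapped onto the overall axis.
print(f"sector-wise 12 -> overall {overall_equivalent(12 ** 4):.2f}")
```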

I have performed tests on st_physics_11130004_raw_2010001.daq (normally hangs on the 8th event), and st_physics_11127004_raw_2010001.daq (normally hangs on the 3745th event). Both now succeed in running to completion after skipping several events, and events both before and after the skipped events otherwise find the same tracks & vertices. I believe this is ready to be tried in production.

_____________

Aside on Sequential excessively large TPC events

_____________

-Gene
