Operations

Welcome to the STAR Operations Home Page

Daily operations meeting at 10 am on Zoom: https://bnl.zoomgov.com/j/1603605873?pwd=NVk1aWs2OW5yb01rTzNtODhiaUJtQT09

(Zoom Meeting ID: 160 360 5873 Passcode: 110789)

Zoom room for STAR control room, shift, and operations communications: https://bnl.zoomgov.com/j/1605144596?pwd=N3ExMDh3Q2txK0FxYTBVTzg4N0hHZz09

(Zoom Meeting ID: 160 514 4596 Passcode: 726787)

STAR online page: https://online.star.bnl.gov

STAR operations email list: https://lists.bnl.gov/mailman/listinfo/star-ops-l

Select the menu for information relevant to STAR Operations.


Operations information and checklists

- Plan for the day / Notes from Operations Meeting for Run25

- STAR Detector States for Run25 Au+Au 200 GeV collisions (Current Version 08/05/2025)

- STAR Data taking guide for Run25 (Updated 08/05/2025)

- STAR Expert Call List for Run25 (Current Version 08/05/2025)

- Checklist for recovery from power failure (07/3/2025)

- Old version: Checklist for recovering from power failure (2023)

- Detector manuals and procedures

- Trigger DAQ problems with deadtimes / crate failures DURING PHYSICS RUNS

- Reference Plots for Shift Crew

- Online Q/A Plots Manual (Jevp Plots) (updated 6/16/2020)

- Detector Operator Station Setup (Updated 12-Mar-21)

- TPC Operations

- Current DO manual

- TPC operations page

- TPC reference plots and issue problem solving (updated 7/17/2025)

- EPD (updated 7-Mar-18)

- How to check and visualize the EPD mapping from tile to QT channel (updated 8-Jul-23)

- BEMC and FCS (updated 10-Dec-21)

- BSMD (updated 06-Jun-24)

- EEMC (updated 15-Mar-25)

- EMC (updated 17-Dec-02) - this link is dead

- GMT (updated 15-Jan-19)

- FST (updated 18-Jan-22)

- sTGC (updated 27-Jan-22)

- Magnet (updated 17-Dec-02)

- Magnet Monitoring

- Offline QA

- Current RunControl/DAQ issues (updated 5/5/2021)

- Current Trigger Deadtime Issues (updated 4/8/2022)

- Run Control (updated 3/2/18)

- Slow Controls Procedures

- TOF/MTD General Instructions (updated 31-May-2025)

- TOF Gas Bottle Switchover Procedure (updated 2024 Jul 15)

- TOF Power Cycle Procedure (Run2023), [TOF Power Cycle Procedure (Old version with Anaconda)]

- TOF LVIOC Restart Procedure

- TOF/MTD HV IOC Restart Procedure (Updated 2023)

- MTD Power Cycle Procedure (Run2022), [MTD Power Cycle Procedure (Old version with Anaconda)]

- MTD LVIOC Restart Procedure

- Canbus Restart Procedure, [Canbus Restart Procedure (Old version with Anaconda)]

- Solve MTD (eTOF) Isobutane Fraction Alarm Instructions

- Mask TOF/MTD RDOs (updated 2025, July 24)

- eTOF General Instructions (updated 2025, March 21)

- Trigger (updated 5-Mar-13)

- BBC/ZDC/VPD LeCroy1440 Communication Problem Procedure

- V124 and STAR global timing

- C-AD Procedure for Exciting the STAR Magnet (not accessible to normal users)

- C-AD Procedure for Bypassing the STAR Safety Interlock Systems (not accessible to normal users)

- List of Authorized People for the STAR SGIS Interlock System (not accessible to normal users)


STAR Operations

- STAR Safety Review for Run19 (not a link)

- STAR FY19 Environmental emissions.doc (not a link)

- Procedures for detectors not currently available

- FPS/FPOST (updated 04-Apr-17)

- FMS UV curing procedure (updated 21-Mar-17)

- RP

- RP Slow control instructions (updated 2017-Mar-10)

- Roman Pots Operations manual (updated 2017-Jun-24)


STAR Technical Support Group

Run-22 pp510 guides (SL desk printouts)

- Runs stopped by BBC/L2 (slow DMA) (updated 01/17/22): use this when L2 stops the run or when any trigger VME crate prevents the run from starting.

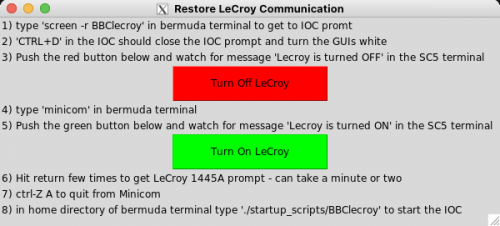

BBC/ZDC/VPD HV system (LeCroy1440) communication problem

Loss of communication with LeCroy1445A.

This often happens when the LeCroy has been turned off or has lost power due to a power dip.

Indications are:

- The "bbchv" app on sc3 shows black on/off indicators

- The "bbchv" app does not update the readout voltages/currents

- HV cannot be turned on/off from "bbchv"

Solution:

Go to the SC5 computer. There should be a "Restore LeCroy Communication" window:


Follow the instructions on the window.

If the window is not open on SC5, open a terminal and type ./scripts/restartLC.py

Make sure you have a bermuda terminal open on the next monitor.

(If not, open a terminal and type the "sys@bermuda" command. The password is the same as the one for the SC5 sysuser in the shift leader's binder.)

Controlled/Restricted Access Requests

- The period coordinator/shift leaders should have a list with controlled access requests.

- Leave your phone number if you want to be called for unscheduled controlled access.

- There are only 8 keys for controlled access.

- Always let the shift leader know when you go in and come out.

- Make a note in the elog about the work that was performed.

- Next maintenance day on Thursday, March 9, 2017 (7:30am-3:30pm).

Detector Readiness Checklist for Cosmics

production_pp200long2_2 with TOF+MTD+ETOW+BTOW+ESMD+BSMD+GMT+FPS+PP+IST+ (Feb. 27, 2018)

Detector Readiness Checklist (Cosmic Data Taking, 2018)

1) Once Per Day

A) Reboot bdb.starp.bnl.gov (see section 3 in the slow controls manual)

B) Noise run for TOF/MTD: pedAsPhys_tcd_only with TRG+DAQ+TOF+MTD (4M events, takes about 5-6 minutes)

C) EPD IV scan (can be in parallel with cosmics, mark run in elog)

2) Pedestals once per Shift

A) Take pedestal_tcd_only with TRG+DAQ+TPX+ITPC+ETOW+TOF+ETOF+MTD+GMT+FCS (1 event, run control will issue additional events automatically)

B) Take pedestal_rhicclock_clean with TRG+DAQ (1k events)

3) Cosmic Data Taking

A) Check detector states for cosmic data taking

B) Take CosmicLocalClock with TRG+DAQ+TPX+ITPC+ETOW+TOF+ETOF+MTD+GMT+L4 (30 minutes)

C) Laser runs every 4 hours (warm up in advance, 4k events)

Notes:

ETOF HV/FEE is still under expert control. If the magnet needs to be ramped or trips, call the experts!

The status of ETOF may change; check with the outgoing shift leader and the elog!

Detector Readiness Checklist for current run


Detector Readiness (old)

(old - attachments hidden)

Detector States Spreadsheet

Detector States (old)

(old)

Notes from the Operations Meetings

August 9th, 2025

Zilong Chang

Running 200 GeV Au+Au

RHIC schedule:

· Saturday to Sunday: Physics

STAR status:

· Day 220: 15 hours; MB: 95 M events; BHT3 luminosity: 0.16 1/nb

· “RECONFIG ERR-fcs09” occurred again; automatic power cycling of FCS may not be working. The shift crew should stop the run, power cycle the FCS FEE, and start a new run.

· L4 is back. We may need to change the physical node in the future.

· The lasers seemed weak overnight; Alexei will look at them this morning.

· Three EEMC HV tiles are red; this was noted in the shift log, and the QA plots look fine.

· TOF RDO1 is masked (~25%). Fix options: i) reload the firmware (needs Tim and the magnet down for 1 hour; priority if an opportunity arises; we may request ~2 h of access if possible); ii) replace the THUB (4-6 hours, two-person job).

· Masked out iTPC RDOs: S08-1, S12-2, S20-1, (S02-3, S04-4, S14-1, S16-2)

STAR plan:

· First 4 hours at high lumi (ZDC ~100 kHz), then 2 hours at low lumi (1 mrad crossing angle, ZDC ~13 kHz), all at full field

· HiLumi:

o First 3 hours: DAQ rate should be ~800Hz, detector dead time < ~7%

o 3rd to 4th hour: DAQ rate should be ~1.7 kHz, detector dead time < ~20%

· LoLumi:

o DAQ rate should be ~4kHz, detector dead time < ~50%
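The HiLumi/LoLumi targets above follow a simple schedule keyed on the time since the start of a store. As a minimal sketch, assuming the store follows the 4+2 plan exactly, they can be encoded as a lookup that compares a measured dead time against the plan. The helper names (`RunTarget`, `target_for`, `deadtime_ok`) are hypothetical and not part of any STAR online tool; the "~" values are guidance rather than hard limits, and real store lengths and CAD leveling vary.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RunTarget:
    """Approximate Run25 target for one phase of a 4+2 hour store."""
    label: str
    daq_rate_hz: float   # "~" value copied from the running plan, not a hard limit
    max_deadtime: float  # upper bound on detector dead time, as a fraction


def target_for(hours_into_store: float) -> RunTarget:
    """Map hours since the start of a store to the running-plan target.

    Hypothetical helper: assumes 4 h of high lumi (ZDC ~100 kHz) followed by
    low lumi (1 mrad crossing angle, ZDC ~13 kHz) until the store ends.
    """
    if hours_into_store < 0:
        raise ValueError("hours_into_store must be non-negative")
    if hours_into_store < 3:
        return RunTarget("HiLumi, hours 0-3", 800.0, 0.07)
    if hours_into_store < 4:
        return RunTarget("HiLumi, hours 3-4", 1700.0, 0.20)
    # Everything after the first 4 hours is treated as low lumi
    return RunTarget("LoLumi", 4000.0, 0.50)


def deadtime_ok(hours_into_store: float, measured_deadtime: float) -> bool:
    """True if the measured dead time (fraction) is below the plan's bound."""
    return measured_deadtime < target_for(hours_into_store).max_deadtime


if __name__ == "__main__":
    for h, dt in [(1.0, 0.05), (3.5, 0.25), (5.0, 0.40)]:
        t = target_for(h)
        print(f"{h:>4} h: {t.label}, ~{t.daq_rate_hz:.0f} Hz, "
              f"dead time {dt:.0%} ok={deadtime_ok(h, dt)}")
```

Keying on hours since store start, rather than on a mode flag, also covers the "switch to low lumi after 4h and continue until it ends" default used for long stores.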

August 8th, 2025

Zilong Chang

Running 200 GeV Au+Au

RHIC schedule:

· Friday: Physics; 1-hour access after the current store for the RF cavity issue. Does anyone need access?

· Saturday to Sunday: Physics with quick turnaround times ( ~ 38 minutes)

STAR status:

· Day 219: 18 hours; MB: 65 M events; BHT3 luminosity: 0.25 1/nb

· Was L4 taken out of the run? The L4 evp crashed and must be rebooted in person; Wayne's help is needed.

· RECONFIG ERR-fcs09 occurred multiple times, related to an FCS DEP09:8 failure; the automatic power cycle may not be working. Tonko will check.

· Configuration errors on EQ1_QTD, EQ2_QTD, and EQ4_QTD prevented data taking. Jeff, Akio, and Wayne joined on Zoom and finally solved the problem; the network switch was fixed.

· FST was excluded from the “pedestal_tcd_only” run 26219072. Not a big deal.

· The EQ4 power supply air temperature is in the red (~46 °C); continue monitoring.

· MIX DSM2 took longer to come back after power cycling. This is nontrivial; investigation continues, with updates by next Tuesday.

· TOF RDO1 is masked (~25%). Fix options: i) reload the firmware (needs Tim and the magnet down for 1 hour; priority if an opportunity arises; we may request ~2 h of access if possible); ii) replace the THUB (4-6 hours, two-person job).

· Masked out iTPC RDOs: S02-3, (S04-4, S14-1, S16-2)

STAR plan:

· First 4 hours at high lumi (ZDC ~100 kHz), then 2 hours at low lumi (1 mrad crossing angle, ZDC ~13 kHz), all at full field

· HiLumi:

o First 3 hours: DAQ rate should be ~800Hz, detector dead time < ~7%

o 3rd to 4th hour: DAQ rate should be ~1.7 kHz, detector dead time < ~20%

· LoLumi:

o DAQ rate should be ~4kHz, detector dead time < ~50%

August 7th, 2025

Zilong Chang

Running 200 GeV Au+Au

RHIC schedule:

• Wednesday: APEX, back at Physics just before midnight

• Thursday to Sunday: Physics

STAR status:

• Day 218: 3.4 hours; MB: 24 M events (average rate ~1.9 kHz); BHT3 luminosity: 0.04 1/nb

• All three BHT thresholds were raised. The dimuon trigger rate was set to around 350 Hz in the high-lumi configuration, at the cost of a 2-fold reduction in the BHT2 rate (~300 Hz); the total rate is still around 800 Hz.

• Magnet trim west tripped around 11 am yesterday; it was ramped back to full field in the afternoon.

• Newly masked-out iTPC RDOs: S04-4, (S14-1, S16-2)

• TOF RDO1 is masked (~25%). Fix options: i) reload the firmware (needs Tim and the magnet down for 1 hour; priority if an opportunity arises; we may request ~2 h of access if possible); ii) replace the THUB (4-6 hours, two-person job).

• A possible trigger issue is causing high BHT3 rates: the HT bits from input channel 2 of BC106 are floating high. If rebooting or a full reconfiguration does not fix it, power cycle the BCE and BC1 crates.

• MIX DSM2 took longer to come back after power cycling; nontrivial, investigation continues.

• EPD max TAC: 1/7 not working; after further investigation the problem disappeared. Done.

• ETOF HV was fixed by power cycling the HV crate and restarting the HV IOC. Done.

• When a TOF error message asked for a power cycle, the shift crew should not have taken TOF out of the run, because TOF is an important trigger detector. Instead, the shift crew should stop the run, power cycle, and start a new run.

STAR plan:

• First 4 hours at high lumi (ZDC ~100 kHz), then 2 hours at low lumi (1 mrad crossing angle, ZDC ~13 kHz), all at full field

• HiLumi:

o First 3 hours: DAQ rate should be ~800Hz, detector dead time < ~7%

o 3rd to 4th hour: DAQ rate should be ~1.7 kHz, detector dead time < ~20%

• LoLumi:

o DAQ rate should be ~4kHz, detector dead time < ~50%

August 6th, 2025

Zilong Chang

Running 200 GeV Au+Au

RHIC schedule:

· Tuesday: RF cavity repairs, but more trouble overnight

· Wednesday: APEX, then Physics

· Thursday to Sunday: Physics

STAR status:

· APEX mode since 8 am. Day 217: 14 hours; MB: 27.8 M events; BHT3 luminosity: 0.22 1/nb

· Overnight, multiple issues kept us from taking data: L2 stopping the run (power cycled the BCE, EQ4, MIX, and L0L1 crates); trigger 100% dead due to sTGC (power cycled the sTGC LV); and high BHT3 rates (twice during the day; likely a trigger issue, not due to hot towers, and rebooting the BTOW FE did not help; looking at the trigger data to investigate). ETOW crate 1 errors (fixed by the DO).

· MIX DSM2 took longer to come back after power cycling; to be looked at.

· Global interlock alarm “Detector HSSD malfunction”: called Prashanth and reported to CAS.

· High dead time due to BTOW: TP 260 and 261 were masked out, in addition to the trigger rebooting issue mentioned above.

· L4 is back after multiple reboots of L401; note that there were no changes in configuration or hardware.

· TOF RDO1 is masked (~25%). Fix options: i) reload the firmware (needs Tim and the magnet down for 1 hour; priority if an opportunity arises; we may request ~2 h of access if possible); ii) replace the THUB (4-6 hours, two-person job).

· EPD max TAC: 1/7 not working; under further investigation.

· Newly masked-out iTPC RDOs: S14-1, S16-2

· ETOF HV is off (not included in the run); waiting for the next maintenance day.

STAR plan:

· First 4 hours at high lumi (ZDC ~100 kHz), then 2 hours at low lumi (1 mrad crossing angle, ZDC ~13 kHz), all at full field

· HiLumi:

o First 3 hours: DAQ rate should be ~800Hz, detector dead time < ~7%

o 3rd to 4th hour: DAQ rate should be ~1.7 kHz, detector dead time < ~20%

· LoLumi:

o DAQ rate should be ~4kHz, detector dead time < ~50%

STAR daily operation meeting 08/5/2025

PC: Kong Tu -> Zilong Chang

§ RHIC Schedule

Physics AuAu 200 GeV

· First 4h of 100kHz (STAR) for high lumi, and 2h of low-lumi (1mrad crossing angle and 13kHz) running at full field. Storage cavity issues are resolved. Since yesterday, STAR luminosity has improved through fine adjustment of the residual crossing angle.

Plan for this week:

· Physics (until Tuesday)

· APEX (Wednesday)

· Back to Physics.

§ STAR status

· TOF RDO1 is masked. Geary was informed, but it is not resolved yet. Geary: the northwest THUB would not configure. Fix: i) reload the firmware, which needs Tim and the magnet down for 1h; ii) replace the THUB, which needs 4-6 hours.

· L4 is not running (L4-01 machine offline). Diyu has been looking into it; Wayne is fixing it.

· Tonko repaired some RDOs and unmasked them.

· Newly masked-out RDOs: iTPC sector 14, RDO 1.

· Hank: the EPD max TAC issue (1/7 of EPD) moved from one board to another, which indicates it is not a hardware issue. Will continue investigating.

§ Plans:

§ Running plan until further notice:

· HiLumi (first ~3hrs): DAQ rate should be ~800Hz, deadtime ~<7%

· HiLumi (next 1 hr): DAQ rate should be ~1.7 kHz, deadtime ~<20%

· LoLumi: DAQ rate should be ~4kHz, deadtime~<50%

STAR daily operation meeting 08/4/2025

§ RHIC Schedule

Physics AuAu 200 GeV

· First 4h of 100kHz (STAR) for high lumi, and 2h of low-lumi (1mrad crossing angle and 13kHz) running at full field. Storage cavity issues remain; 4 hours of access this afternoon.

Plan for this week:

· Physics (until Tuesday)

· APEX (Wednesday)

· Back to Physics.

§ STAR status

· Long low-lumi run yesterday during Summer Sunday.

· Smooth running in the past 24h.

· A few minor issues, all recovered by the shift crew.

· Reach out to Tonko to see if he needs to look into the RDO repair.

· Jeff will look into the VPD vertices. Will send out an email to the trigger board.

· Run cosmics during the downtime if Tonko is not working on anything.

§ Plans:

· Does anyone need access this afternoon? Let us know.

§ Running plan until further notice:

· HiLumi (first ~3hrs): DAQ rate should be ~800Hz, deadtime ~<7%

· HiLumi (next 1 hr): DAQ rate should be ~1.7 kHz, deadtime ~<20%

· LoLumi: DAQ rate should be ~4kHz, deadtime~<50%

STAR daily operation meeting 08/3/2025

§ RHIC Schedule

Physics AuAu 200 GeV

· First 4h of 100kHz (STAR) for high lumi, and 2h of low-lumi (1mrad crossing angle and 13kHz) running at full field. Storage cavity issues remain; leveling has been difficult.

Plan for this week:

· Physics (until next Monday/Tuesday; 08.03 is Summer Sunday, with one store from 8am to 5pm. STAR will run in the default mode, i.e., switch to low lumi after 4h and continue until the store ends.)

§ STAR status

· Smooth running in the past 24h.

· The EEMC-DC issue was fixed by David power cycling the crate (#91).

· Some TPX/iTPC RDOs were masked out (TPX sector 1 RDO 5, iTPC sector 15 RDO 3); see the shift log.

§ Plans:

§ Running plan until further notice:

· HiLumi (first ~3hrs): DAQ rate should be ~800Hz, deadtime ~<7%

· HiLumi (next 1 hr): DAQ rate should be ~1.7 kHz, deadtime ~<20%

· LoLumi: DAQ rate should be ~4kHz, deadtime~<50%

STAR daily operation meeting 08/2/2025

§ RHIC Schedule

Physics AuAu 200 GeV

· First 4h of 100kHz (STAR) for high lumi, and 2h of low-lumi (1mrad crossing angle and 13kHz) running at full field. The accelerator had a lot of issues (cavity failure); the last store leveled at 65kHz.

Plan for this week:

· Physics (until next Monday/Tuesday; 08.03 is Summer Sunday, with one store from 8am to 5pm.)

§ STAR status

· MTD HV issue fixed: during a 10-minute access yesterday evening, David replaced the power supply for the MTD CAEN SY4527.

· The TOF/MTD DAQ issue was resolved.

· ETOW warning/issue. Will Jacob sent an email about the possible cause and fix, and experts were informed. Tonko: EEMC-DC is dead. Fix: power cycle the crate (#91), which can be done from slow controls (it should be done between fills; ~5 mins).

· The shift crew did not notice the iTPC auto-recoveries for 7 mins. A section of the TPC (sector 14, RDO 5) appeared empty in fast offline. Tonko has already been informed.

· Trigger communication issue yesterday evening, fixed with help from experts. Akio: the shift crew followed the instructions but could not resolve it by themselves after the power dip. In a separate EQ4 issue, the shift crew power cycled the VME crate, but it took them 30 mins; they could have called earlier.

· Hank: 1/7 of EPD EAST not communicating for the trigger. Update: not critical and needs access. Only affects EPD TAC triggers.

§ Plans:

§ Running plan until further notice:

· HiLumi (first ~3hrs): DAQ rate should be ~800Hz, deadtime ~<7%

· HiLumi (next 1 hr): DAQ rate should be ~1.7 kHz, deadtime ~<20%

· LoLumi: DAQ rate should be ~4kHz, deadtime~<50%

§ Summer Sunday: anything to note from the operations side? Only in the assembly hall. There will be a long store.

STAR daily operation meeting 08/1/2025

§ RHIC Schedule

Physics AuAu 200 GeV

· First 4h of 100kHz (STAR) for high lumi, and 2h of low-lumi (1mrad crossing angle and 13kHz) running at full field.

Plan for this week:

· Physics (until next Monday/Tuesday; 08.03 is Summer Sunday, with one store from 8am to 5pm.)

§ STAR status

· MTD/TOF issue: still not resolved; the DAQ data are not physics events. Geary (expert) will take a look.

· sTGC had a lot of trips; Prashanth has already provided instructions. It will be kept at low voltage. Good for now.

· Hank: 1/7 of EPD EAST not communicating for the trigger.

§ Plans:

§ Running plan until further notice:

· HiLumi (first ~3hrs): DAQ rate should be ~800Hz, deadtime ~<7%

· HiLumi (next 1 hr): DAQ rate should be ~1.7 kHz, deadtime ~<20%

· LoLumi: DAQ rate should be ~4kHz, deadtime~<50%

§ Summer Sunday: anything to note from the operations side? Only in the assembly hall. There will be a long store.

STAR daily operation meeting 07/31/2025

§ RHIC Schedule

Physics AuAu 200 GeV

· First 4h of 100kHz (STAR) for high lumi, and 2h of low-lumi (1mrad crossing angle and 13kHz) running at full field. Some trouble injecting last night.

Plan for this week:

· Physics (until next Monday/Tuesday; 08.03 is Summer Sunday)

§ STAR status

· The maintenance day was a success; all scheduled work was completed. Thanks to all the experts. Tonko: one issue remains; the FCS network switch is still not responding. Wayne is needed.

· Oleg: the access took much longer than CAD anticipated, which cut our work time. CAD needs to communicate better.

· ETOW had an issue; an expert helped the shift crew bring it back early this morning.

· TRG got stuck and lost communication around 8:30am this morning. Wayne fixed it after power cycling the network switches twice. However, we need to be prepared to replace the network switch in case it dies completely.

· Tim could not reproduce the problem in the lab with the board that was replaced, which is still confusing. Oleg: the shift crew needs to follow the DAQ message; rebooting will not help. If a few attempts to restart the run fail, they should call an expert. Jeff: at least note in the shift log that they attempted a few times before rebooting all.

· Hank: 1/7 of EPD EAST not communicating for the trigger.

· A mouse was reportedly caught.

§ Plans:

§ Running plan until further notice:

· HiLumi (first ~3hrs): DAQ rate should be ~800Hz, deadtime ~<7%

· HiLumi (next 1 hr): DAQ rate should be ~1.7 kHz, deadtime ~<20%

· LoLumi: DAQ rate should be ~4kHz, deadtime~<50%

§ Summer Sunday: anything to note from the operations side? Only in the assembly hall. There will be a long store.

STAR daily operation meeting 07/30/2025

§ RHIC Schedule

Physics AuAu 200 GeV

· First 4h of 100kHz (STAR) for high lumi, and 2h of low-lumi (1mrad crossing angle and 13kHz) running at full field.

Plan for this week:

· Maintenance (today)

· Physics (1:30pm sweep; from 2pm today to next Monday; 08.03 is Summer Sunday)

§ STAR status

· No major issue over the past 24 hours.

· DAQ rate of about 2500 Hz, but a reboot and run restart solved it. The issue was related to BTOW.

· BTOW crate #8 failed very often. Oleg: one board needs to be replaced. Tim is already here working on it.

· EPD EQ3: may have to change a board. Tim is needed as well.

· Access began around 7 am. STAR magnet at zero field.

§ Plans

· Wednesday, plan and coordination:

· Alexi: work on the east laser; also look into the tripping channels.

· Tonko needs to work on a few things - RDO maintenance, MTD fiber.

· Prashanth will call Tim. (and will report back to STAR-ops)

· Souvik: FST cooling inspection. Prashanth/Alexi will help.

· Tim is needed for the following:

(1) MTD fiber issue;

(2) BSMD

(3) FCS network power switch. Wayne needs to be notified too.

· Oleg needs to work on BSMD board.

· In parallel: EIC work.

· Evgeny, Andri, Thomas (IO), 3 hours max on the diamond detector.

· Weibin: cable connection on insert calorimeter prototype. 2-3 hours.

STAR daily operation meeting 07/29/2025

§ RHIC Schedule

Physics AuAu 200 GeV

· First 4h of 100kHz (STAR) for high lumi, and 2h of low-lumi (1mrad crossing angle and 13kHz) running at full field.

· The weather will be hot today and tomorrow.

Plan for this week:

· Physics (today, and Thursday to next Monday; 08.03 is Summer Sunday)

· Maintenance (Wednesday)

§ STAR status

· No major issue over the past 24 hours. Very short half-field running yesterday. Half-field running for today may start soon.

· Mouse issue still needs to be solved.

· The MTD fiber 1 issue is still NOT fixed.

§ Plans

· Wednesday, plan and coordination:

· Drop to half field. Maintenance starts early (~6 am). (Update after the Time meeting.)

· Alexi: work on the east laser; also look into the tripping channels.

· Tonko needs to work on a few things - RDO maintenance, MTD fiber.

· Prashanth will call Tim. (and will report back to STAR-ops)

· Souvik: FST cooling inspection. Prashanth/Alexi will help.

· Tim is needed for the following:

(1) MTD fiber issue;

(2) BSMD

(3) FCS network power switch. Wayne needs to be notified too.

· Oleg needs to work on BSMD board.

· In parallel: EIC work.

· Evgeny, Andri, Thomas (IO), 3 hours max on the diamond detector.

· Weibin: cable connection on insert calorimeter prototype. 2-3 hours.

STAR daily operation meeting 07/28/2025

§ RHIC Schedule

Physics AuAu 200 GeV

· First 4h of 100kHz (STAR) for high lumi, and 2h of low-lumi (1mrad crossing angle and 13kHz) running at full field.

· The weather will be hot for the next 3 days.

Plan for this week:

· Physics (today and tomorrow)

· Maintenance (Wednesday)

§ STAR status

· No major issues over the weekend. We caught up on our luminosity.

· 10-min access Sunday morning to deep power cycle the DEP crate. Tonko: fcal-208v is still not responding, so the issue is either a) a broken fcal-208v or b) something upstream, e.g., the Ethernet switch that provides its network. However, the DEP crate power cycle helped. We need to fix this because we don't want to go in and power cycle every time. [Tim and Wayne should look into this.]

· JH: the fluctuating ZDC rates in low lumi were due to the leveling. This now seems to be understood and fixed.

· Mouse issue still needs to be solved.

· The MTD fiber 1 issue is still NOT fixed. (Wednesday, plan?)

§ Plans

· Wednesday, plan:

· Drop to half field as soon as possible (~8am).

· Tonko needs to work on a few things.

· Oleg needs to work on the BSMD board.

· Tim and Wayne are needed.

· Running plan until further notice:

· HiLumi (first ~3hrs): DAQ rate should be ~800Hz, deadtime ~<7%

· HiLumi (next 1 hr): DAQ rate should be ~1.7 kHz, deadtime ~<20%

· LoLumi: DAQ rate should be ~4kHz, deadtime~<50%

STAR daily operation meeting 07/27/2025

§ RHIC Schedule

Physics AuAu 200 GeV

· First 4h of 100kHz (STAR) for high lumi, and 2h of low-lumi (1mrad crossing angle and 13kHz) running at full field. This store is going to be extended.

Plan for this week:

· Physics (until Monday)

§ STAR status

· Three mice were found/spotted in the control room last night.

· The shift log was down but was brought back quickly.

· sTGC plane 3 upper-right sector is blank; Prashanth has given instructions on what to try.

· FCS lost control of its LV power; the crate needs a power cycle. Short access? We should first power cycle the network switch, which needs Wayne but no access. Reach out to Wayne, and ask for a 10-min access.

· Observation: during low-lumi running, event rates fluctuated up and down by 10%. This lasted 1.5 hours and then stopped. Maybe related to the Booster main magnet PS.

· The MTD fiber 1 issue is still NOT fixed.

§ Plans

· Running plan until further notice:

· HiLumi (first ~3hrs): DAQ rate should be ~800Hz, deadtime ~<7%

· HiLumi (next 1 hr): DAQ rate should be ~1.7 kHz, deadtime ~<20%

· LoLumi: DAQ rate should be ~4kHz, deadtime~<50%

STAR daily operation meeting 07/26/2025

§ RHIC Schedule

Physics AuAu 200 GeV

· First 4h of 100kHz (STAR) for high lumi, and 2h of low-lumi (1mrad crossing angle and 13kHz) running at full field

Plan for this week:

· Physics (until Monday)

§ STAR status

· We had some half-field running yesterday afternoon due to the high temperature. Then a thunderstorm came through around 5pm.

· A power dip caused a loss of power on the platform. The shift crew and a few experts performed the full recovery procedure, which took about 3-4 hours.

· Before the large power dip, Oleg made a few accesses trying to fix the BTOW issue, without success; however, as found later, the large power dip seemed to fix the issue. Oleg: were there pedestal runs? Check with the shift crew. Hank: yes, there were.

· Almost smooth running overnight, with a few sTGC and TPC anode trips (the shift crew lowered the voltages) and an L1 issue. Most were resolved. Alexi: with high-intensity beam there are a lot of trips; lowering the voltages is the approach. Laser: the 2nd run of a new store should be a laser run, but this was not always followed.

· Jeff: the L1 issue last night was unusual, but power cycling the L1 crate did fix it.

· The MTD fiber 1 issue is NOT fixed yet.

§ Plans

· Running plan until further notice:

· HiLumi (first ~3hrs): DAQ rate should be ~800Hz, deadtime ~<7%

· HiLumi (next 1 hr): DAQ rate should be ~1.7 kHz, deadtime ~<20%

· LoLumi: DAQ rate should be ~4kHz, deadtime~<50%

STAR daily operation meeting 07/25/2025

§ RHIC Schedule

Physics AuAu 200 GeV

· Back to: first 4h of 100kHz (STAR) for high lumi, and 2h of low-lumi (1mrad crossing angle and 13kHz) running

· High temperature today (high of 91F). Magnet to half field at 11am. Thunderstorm possible this evening.

Plan for this week:

· Physics (until Monday)

§ STAR status

· MIX issue was fixed during the access we asked for. Thanks to experts and their teamwork.

· The MTD issue was NOT fixed; it needs more time and thought. The lack of wifi in the building didn't help (did we contact ITD?). Although it shows the same symptom as last week, it could be something else. The next access is Wednesday (maintenance day); it will need more than 10 mins, likely with Tim.

· Just now: some TPX issues (TPX[28]; possibly the PC or its PS needs swapping). Tonko will contact Wayne and Jeff, if he has not already. Wayne will take a look this morning.

· sTGC plane 1 channel 4 trips. Prashanth has worked on it and seems fine now.

· Jeff: the issue is related to the TPX[27]. Will talk to the shiftcrew.

· BTOW crate 24 is having issues but data looks fine, Oleg will take a look.

· ETOW crate 2 issue.

§ Plans

· Running plan until further notice:

· HiLumi (first ~3hrs): DAQ rate should be ~800Hz, deadtime ~<7%

· HiLumi (next 1 hr): DAQ rate should be ~1.7 kHz, deadtime ~<20%

· LoLumi: DAQ rate should be ~4kHz, deadtime~<50%

STAR daily operation meeting 07/24/2025

§ RHIC Schedule

Physics AuAu 200 GeV

· APEX ended earlier yesterday around 5pm.

· 100kHz (STAR) for high lumi, and no low-lumi (1mrad crossing angle and 13kHz) running

· Last fill was good.

Plan for this week:

· Physics (until Monday)

§ STAR status

· Laser camera 3 is back and seems OK now. (Alexi thinks it was due to the shift crew's inexperience.)

· Quite a lot of rebooting due to TPX dead time last night. TPX RDOs 5 & 6 were masked out, which should not have happened. Two hours of data were lost. The shift crew should be asked to document this!! Jeff will take a look.

· EVB[6] is out; experts should look into it. Jeff: hardware problem; it can stay out for now.

· MTD fiber 1 is now masked. (Shiftcrew originally didn’t know how to mask, and Tonko helped)

· TOF/VPD HV mysteriously turned off (?) and shiftcrew had to turn back on.

· ZDC/SMD still has issues (high ADC in low-lumi); trigger biased? But probably not an issue.

· MIX is still not working. Access is needed; see below.

§ Plans

· Fixing MIX crate:

· Akio is coordinating among Tim, Wayne, Chris, and others. The following plan is pending communication with Tim.

(1) Tim prepares a spare DSM2 and a computer to connect to the serial port

(2) Ask for 30min access

(3) Move dsm2-20 to different channel on the switch

(3.5) Disconnect dsm2-20 to check that nothing else is using its IP address

(4) If that does not solve the issue, check IP address of the board via serial port

(5) If that does not solve the issue, replace TF003

(7) Chris installs the TOF firmware

(8) Exit WAH & end access

(9) Update Tier1 file (Chris...or Eleanor)

· When we have access:

(1) MTD fiber issue can be fixed together. (Alexi, Prashanth, Tonko)

(2) Weibin needs 3 mins inside.

· Running plan until further notice:

· HiLumi (first ~2hrs): DAQ rate should be ~800Hz, deadtime ~<7%

· HiLumi (second 2 hrs): DAQ rate should be ~1.7 kHz, deadtime ~<20%

· LoLumi: DAQ rate should be ~4kHz, deadtime~<50%

STAR daily operation meeting 07/23/2025

§ RHIC Schedule

Physics AuAu 200 GeV

· 100kHz (STAR) for high lumi, 13kHz with 1mrad crossing angle for the low lumi

· Last night's beam/lumi conditions were bumpy; CAD said they are looking into it.

Plan for this week:

· APEX (Wed day shift.)

· Physics (Wed evening to next week)

§ STAR status

· MIX crate not working. Experts took an initial look; it seems access is needed (?). Chris suggested power cycling the network switch first and then checking. Reach out to Wayne for the power cycle. An access of 1h might be enough if the power cycle doesn't work.

· Laser camera 3 is very dim. Wait until Alexi comes back.

§ Plans

· Running plan:

· HiLumi (first ~2hrs): DAQ rate should be ~800Hz, deadtime ~<7%

· HiLumi (second 2 hrs): DAQ rate should be ~1.7 kHz, deadtime ~<20%

· LoLumi: DAQ rate should be ~4kHz, deadtime~<50%

Period Coordinator: Ashik Ikbal Sheikh

Tuesday, July 22:

Period Coordinator: Ashik Ikbal Sheikh

Incoming Period Coordinator: Kong Tu

9AM CAD daily meetings: MWF

STAR operation meeting is still daily.

RHIC Schedule:

We had continuous physics stores yesterday and collected Au+Au 200 GeV data: leveling at 100kHz for HiLumi; vertical and longitudinal cooling on; and 13kHz leveling (1mrad crossing angle) for low lumi. Physics stores are scheduled for today. The third APEX is scheduled for tomorrow.

STAR requests: high-lumi and low-lumi operation mode (6-hour fill):

high-lumi: no crossing angle 100kHz leveling, first 4 hours

low-lumi: 1mrad crossing angle and 13kHz leveling, last 2 hrs

STAR Status:

Running in the high-lumi and low-lumi (4+2) dual mode. This mode will be used for the remaining operation until further notice.

HiLumi (first ~2hrs): DAQ rate should be ~800Hz, deadtime ~<7%

HiLumi (second 2 hrs): DAQ rate should be ~1.7 kHz, deadtime ~<20%

LoLumi: DAQ rate should be ~4kHz, deadtime~<50%

Issues:

• RDO failure – iTPC S3:2 was masked out

• BTOW configuration failure caused iTPC and TPX to be 100% dead. Oleg rebooted the BEMC crates, which solved it at that moment. We missed beam until it was fixed. Jeff will investigate this further.

Notes:

The shift crew should watch all the plots, along with the BEMC hot towers that caused the issue last night.

Discussion:

Thunderstorm/power dip preventive procedure (APEX mode) when CAD informs us.

If a power dip occurs, call the experts. If the global interlock in the DAQ room alarms, call Prashanth (if no response, go down the list). Inform shift leaders about this procedure.

https://drupal.star.bnl.gov/STAR/public/operations

Monday, July 21:

Period Coordinator: Ashik Ikbal Sheikh

9AM CAD daily meetings: MWF

STAR operation meeting is still daily.

RHIC Schedule:

Had continuous Physics stores yesterday. We collected AuAu200 GeV, starting with 100kHz leveling for HiLumi and 13kHz leveling (1mrad crossing angle) for low-lumi, with vertical and longitudinal cooling on. Physics stores are scheduled for the day, though there will be a very short access today.

STAR requests:

high-lumi and low-lumi operation mode (6-hour fill):

high-lumi: no crossing angle 100kHz leveling, first 4 hours

low-lumi: 1mrad crossing angle and 13kHz leveling, last 2 hrs

STAR Status:

Running with high-lumi and low-lumi (4+2) dual mode. This mode will be used for the remaining operation until further notice.

HiLumi (first ~2hrs): DAQ rate should be ~800Hz, deadtime ~<7%

HiLumi (second 2 hrs): DAQ rate should be ~1.7 kHz, deadtime ~<20%

LoLumi: DAQ rate should be ~4kHz, deadtime ~<50%

Issues:

• RDO failure – iTPC RDO S7:3 was masked out

• A mouse was spotted over this weekend; maintenance staff are supposed to set a trap. Status unknown.

Discussion:

Thunderstorm/power dip preventive procedure (APEX mode) when CAD informs us. If a power dip occurs, call the experts. If the global interlock in the DAQ room alarms, call Prashanth (if no response, go down the list). Inform shift leaders about this procedure: https://drupal.star.bnl.gov/STAR/public/operations

Sunday, July 20:

Period Coordinator: Ashik Ikbal Sheikh

9AM CAD daily meetings: MWF

STAR operation meeting is still daily.

RHIC Schedule:

Had continuous Physics stores yesterday. We collected AuAu200 GeV, starting with 100kHz leveling for HiLumi and 13kHz leveling (1mrad crossing angle) for low-lumi, with vertical and longitudinal cooling on. Physics stores are scheduled for today.

STAR requests: high-lumi and low-lumi operation mode (6-hour fill):

high-lumi: no crossing angle 100kHz leveling, first 4 hours

low-lumi: 1mrad crossing angle and 13kHz leveling, last 2 hrs

STAR Status:

Running with high-lumi and low-lumi (4+2) dual mode. This mode will be used for the remaining operation until further notice.

HiLumi (first ~2hrs): DAQ rate should be ~800Hz, deadtime ~<7%

HiLumi (second 2 hrs): DAQ rate should be ~1.7 kHz, deadtime ~<20%

LoLumi: DAQ rate should be ~4kHz, deadtime ~<50%

Issues:

• BEMC trigger patch 229 was running hot – Masked out

• RDO failure: iTPC RDO S6:2 & S4:4 are masked out

Discussion:

Thunderstorm/power dip preventive procedure (APEX mode) when CAD informs us. If a power dip occurs, call the experts. If the global interlock in the DAQ room alarms, call Prashanth (if no response, go down the list). Inform shift leaders about this procedure. https://drupal.star.bnl.gov/STAR/public/operations

Saturday, July 19:

Period Coordinator: Ashik Ikbal Sheikh

9AM CAD daily meetings: MWF

STAR operation meeting is still daily.

RHIC Schedule:

Had continuous Physics stores yesterday. We collected AuAu200 GeV, starting with 100kHz leveling for HiLumi and 13kHz leveling (1mrad crossing angle) for low-lumi, with vertical and longitudinal cooling on. Physics stores are scheduled for the day.

STAR requests:

high-lumi and low-lumi operation mode (6-hour fill):

high-lumi: no crossing angle 100kHz leveling, first 4 hours

low-lumi: 1mrad crossing angle and 13kHz leveling, last 2 hrs

STAR Status:

Running with high-lumi and low-lumi (4+2) dual mode. This mode will be used for the remaining operation until further notice.

HiLumi (first ~2hrs): DAQ rate should be ~800Hz, deadtime ~<7%

HiLumi (second 2 hrs): DAQ rate should be ~1.7 kHz, deadtime ~<20%

LoLumi: DAQ rate should be ~4kHz, deadtime ~<50%

Issues:

• RECONFIG ERRs for TPX20 and TPX34 still persist; Tonko sent out instructions, which should be followed

• Alexei and Prashanth swapped MTD; it's working fine now

• A mouse was spotted yesterday; a trap needs to be installed

Notes:

The maintenance department will set a mouse trap

Discussion: Thunderstorm/power dip preventive procedure (APEX mode) when CAD informs us. If a power dip occurs, call the experts. If the global interlock in the DAQ room alarms, call Prashanth (if no response, go down the list). Inform shift leaders about this procedure. https://drupal.star.bnl.gov/STAR/public/operations

Friday, July 18:

Period Coordinator: Ashik Ikbal Sheikh

9AM CAD daily meetings: MWF

STAR operation meeting is still daily.

RHIC Schedule:

Yesterday, we took a vernier scan at half field, then got Physics stores with half-field production for a while. The magnet was brought back to full field around 9pm, and we then collected AuAu200 GeV at full field, starting with 100kHz leveling for HiLumi and 13kHz leveling (1mrad crossing angle) for low-lumi, with vertical and longitudinal cooling on.

STAR requests:

high-lumi and low-lumi operation mode (6-hour fill):

high-lumi: no crossing angle 100kHz leveling, first 4 hours

low-lumi: 1mrad crossing angle and 13kHz leveling, last 2 hrs

STAR Status:

With Physics stores and the magnet at full field, running with high-lumi and low-lumi (4+2) dual mode. This mode will be used for the remaining operation until further notice.

HiLumi (first ~2hrs): DAQ rate should be ~800Hz, deadtime ~<7%

HiLumi (second 2 hrs): DAQ rate should be ~1.7 kHz, deadtime ~<20%

LoLumi: DAQ rate should be ~4kHz, deadtime ~<50%

Issues:

• RECONFIG ERR: TPX FEE sector 20:3&4, 20:4&5 and TPX34 were powered off and removed from the subsystem tree; Tonko is looking into this

• MTD was causing runs to stop; the issue was related to Fiber 1, which was masked out, so we are running with half the MTD

• A TPC anode trip does not fire the alarm; David will investigate it

• The alarm limits on sTGC Gas PT-4 were increased (yellow 1.3 -> 4, red 1.6 -> 5). Shift crews should call David Tlusty if it even touches the yellow alarm (at any hour)

Notes:

We need a ~20 min access related to the MTD, planned for around 10:30am.

Discussion:

Thunderstorm/power dip preventive procedure (APEX mode) when CAD informs us. If a power dip occurs, call the experts. If the global interlock in the DAQ room alarms, call Prashanth (if no response, go down the list). Inform shift leaders about this procedure. https://drupal.star.bnl.gov/STAR/public/operations

Thursday, July 17:

Period Coordinator: Ashik Ikbal Sheikh

9AM CAD daily meetings: MWF

STAR operation meeting is still daily.

RHIC Schedule:

Yesterday, the entire day was for maintenance/access. We got Physics stores early this morning and collected AuAu200 GeV, starting with 100kHz leveling for HiLumi and 13kHz leveling (1mrad crossing angle) for low-lumi, with vertical and longitudinal cooling on. Maintenance/access is scheduled for today.

STAR requests:

high-lumi and low-lumi operation mode (6-hour fill):

high-lumi: no crossing angle 100kHz leveling, first 4 hours

low-lumi: 1mrad crossing angle and 13kHz leveling, last 2 hrs

STAR Status:

The STAR magnet tripped while ramping up (due to over-temperature) during the access. We got the magnet back to full field well ahead of the physics stores. Daily pedestals and cosmics were collected.

With Physics stores, running with high-lumi and low-lumi (4+2) dual mode. This mode will be used for the remaining operation until further notice.

HiLumi (first ~2hrs): DAQ rate should be ~800Hz, deadtime ~<7%

HiLumi (second 2 hrs): DAQ rate should be ~1.7 kHz, deadtime ~<20%

LoLumi: DAQ rate should be ~4kHz, deadtime ~<50%

Issues:

• No extraordinary issues

Plans today:

• Vernier scan is scheduled today

• We should take runs with the vernier scan configuration

Notes:

Flemming is updating the TPC related manual. We expect it soon.

Discussion:

Thunderstorm/power dip preventive procedure (APEX mode) when CAD informs us. If a power dip occurs, call the experts. If the global interlock in the DAQ room alarms, call Prashanth (if no response, go down the list). Inform shift leaders about this procedure. https://drupal.star.bnl.gov/STAR/public/operations

Wednesday, July 16:

Period Coordinator: Ashik Ikbal Sheikh

9AM CAD daily meetings: MWF

STAR operation meeting is still daily.

RHIC Schedule:

Continuous Physics stores. We collected AuAu200 GeV, starting with 100kHz leveling for HiLumi and 13kHz leveling (1mrad crossing angle) for low-lumi, with vertical and longitudinal cooling on. Maintenance/access is scheduled for today.

STAR requests: high-lumi and low-lumi operation mode (6-hour fill):

high-lumi: no crossing angle 100kHz leveling, first 4 hours

low-lumi: 1mrad crossing angle and 13kHz leveling, last 2 hrs

STAR Status:

Running with high-lumi and low-lumi (4+2) dual mode. This mode will be used for the remaining operation until further notice.

HiLumi (first ~2hrs): DAQ rate should be ~800Hz, deadtime ~<7%

HiLumi (second 2 hrs): DAQ rate should be ~1.7 kHz, deadtime ~<20%

LoLumi: DAQ rate should be ~4kHz, deadtime ~<50%

Maintenance started at 8am; the magnet is ramped down (servicing the power supply and more?)

Issues:

• RDO failure -- iTPC S11:3 is masked out

Plans during access:

• Tonko is working on the TPC

• Aihong is giving the tour today at 1pm

• sTGC gas tuning by Prashanth

Notes:

Vernier scan tomorrow at lower lumi (~1.2 kHz)

Discussion:

Thunderstorm/power dip preventive procedure (APEX mode) when CAD informs us. If a power dip occurs, call the experts. If the global interlock in the DAQ room alarms, call Prashanth (if no response, go down the list). Inform shift leaders about this procedure. https://drupal.star.bnl.gov/STAR/public/operations

Tuesday, July 15:

Period Coordinator: Ashik Ikbal Sheikh

9AM CAD daily meetings: MWF

STAR operation meeting is still daily.

RHIC Schedule:

Yesterday there was no beam for a long time: the sPHENIX magnet tripped around 12 noon. We collected cosmics during that time. Physics beam was available at 7pm.

We collected AuAu200 GeV, starting with 100kHz leveling for HiLumi and 13kHz leveling (1mrad crossing angle) for low-lumi, with vertical and longitudinal cooling on. Physics stores are scheduled for the day.

STAR requests:

high-lumi and low-lumi operation mode (6-hour fill):

high-lumi: no crossing angle 100kHz leveling, first 4 hours

low-lumi: 1mrad crossing angle and 13kHz leveling, last 2 hrs

STAR Status:

Running with high-lumi and low-lumi (4+2) dual mode. This mode will be used for the remaining operation until further notice.

HiLumi (first ~2hrs): DAQ rate should be ~800Hz, deadtime ~<7%

HiLumi (second 2 hrs): DAQ rate should be ~1.7 kHz, deadtime ~<20%

LoLumi: DAQ rate should be ~4kHz, deadtime ~<50%

Issues:

• No serious issues to be mentioned

Plans for tomorrow:

• There will be an access tomorrow

• A tour is to be scheduled by Aihong

• Alexi: laser work for about 30 mins

• Tonko: Will go through all the lost RDOs

• BSMD might be checked by experts

Discussion:

Thunderstorm/power dip preventive procedure (APEX mode) when CAD informs us. If a power dip occurs, call the experts. If the global interlock in the DAQ room alarms, call Prashanth (if no response, go down the list). Inform shift leaders about this procedure. https://drupal.star.bnl.gov/STAR/public/operations

Monday, July 14:

Period Coordinator: Ashik Ikbal Sheikh

9AM CAD daily meetings: MWF

STAR operation meeting is still daily.

RHIC Schedule:

Continuous Physics stores. We collected AuAu200 GeV, starting with 100kHz leveling for HiLumi and 13kHz leveling (1mrad crossing angle) for low-lumi, with vertical and longitudinal cooling on. Physics stores are scheduled for the day.

STAR requests: high-lumi and low-lumi operation mode (6-hour fill):

high-lumi: no crossing angle 100kHz leveling, first 4 hours

low-lumi: 1mrad crossing angle and 13kHz leveling, last 2 hrs

STAR Status:

Running with high-lumi and low-lumi (4+2) dual mode. This mode will be used for the remaining operation until further notice.

HiLumi (first ~2hrs): DAQ rate should be ~800Hz, deadtime ~<7%

HiLumi (second 2 hrs): DAQ rate should be ~1.7 kHz, deadtime ~<20%

LoLumi: DAQ rate should be ~4kHz, deadtime ~<50%

Issues:

• A couple of RDO failures -- iTPC S20:4 and iTPC S13:4 are masked out

Discussion:

Thunderstorm/power dip preventive procedure (APEX mode) when CAD informs us. If a power dip occurs, call the experts. If the global interlock in the DAQ room alarms, call Prashanth (if no response, go down the list). Inform shift leaders about this procedure. https://drupal.star.bnl.gov/STAR/public/operations

Sunday, July 13:

Period Coordinator: Ashik Ikbal Sheikh

9AM CAD daily meetings: MWF

STAR operation meeting is still daily.

RHIC Schedule:

Continuous Physics stores. We collected AuAu200 GeV, starting with 100kHz leveling for HiLumi and 13kHz leveling (1mrad crossing angle) for low-lumi, with vertical and longitudinal cooling on. Physics stores are scheduled for the day.

STAR requests: high-lumi and low-lumi operation mode (6-hour fill):

high-lumi: no crossing angle 100kHz leveling, first 4 hours

low-lumi: 1mrad crossing angle and 13kHz leveling, last 2 hrs

STAR Status:

Running with high-lumi and low-lumi (4+2) dual mode. This mode will be used for the remaining operation until further notice.

HiLumi (first ~2hrs): DAQ rate should be ~800Hz, deadtime ~<7%

HiLumi (second 2 hrs): DAQ rate should be ~1.7 kHz, deadtime ~<20%

LoLumi (last 2hrs): DAQ rate should be ~4kHz, deadtime ~<50%

Issues:

· A couple of RDO failures -- iTPC S23:3 is masked out

· The magnet tripped due to a power dip at 6am; fixed within an hour

Discussion:

Thunderstorm/power dip preventive procedure (APEX mode) when CAD informs us.

If a power dip occurs, call the experts. If the global interlock in the DAQ room alarms, call Prashanth (if no response, go down the list). Inform shift leaders about this procedure.

https://drupal.star.bnl.gov/STAR/public/operations

Saturday, July 12:

Period Coordinator: Ashik Ikbal Sheikh

9AM CAD daily meetings: MWF

STAR operation meeting is still daily.

RHIC Schedule:

Continuous Physics stores have been available since yesterday. We collected AuAu200 GeV, starting with 100kHz leveling for HiLumi and 13kHz leveling (1mrad crossing angle) for low-lumi, with vertical and longitudinal cooling on. Physics stores are scheduled for the day.

STAR requests: high-lumi and low-lumi operation mode (6-hour fill):

high-lumi: no crossing angle 100kHz leveling, first 4 hours

low-lumi: 1mrad crossing angle and 13kHz leveling, last 2 hrs

STAR Status:

Running with high-lumi and low-lumi (4+2) dual mode. This mode will be used for the remaining operation until further notice.

HiLumi (first ~2hrs): DAQ rate should be ~800Hz, deadtime ~<7%

HiLumi (second 2 hrs): DAQ rate should be ~1.7 kHz, deadtime ~<20%

LoLumi (last 2hrs): DAQ rate should be ~4kHz, deadtime ~<50%

Issues:

· A couple of RDO failures -- iTPC S08:1 & iTPC S11:02 are masked out

· TOF LV had issues and the ETOW GUI turned white; DOs fixed them

· The laser camera was black; DOs power cycled it and fixed it

Discussion:

Thunderstorm/power dip preventive procedure (APEX mode) when CAD informs us.

If a power dip occurs, call the experts. If the global interlock in the DAQ room alarms, call Prashanth (if no response, go down the list). Inform shift leaders about this procedure.

https://drupal.star.bnl.gov/STAR/public/operations

Friday, July 11:

Period Coordinator: Ashik Ikbal Sheikh

9AM CAD daily meetings: MWF

STAR operation meeting is still daily.

RHIC Schedule:

Yesterday there was 56MHz beam development for a while. Then Physics stores were available. We collected AuAu200 GeV, starting with 100kHz leveling for HiLumi and 13kHz leveling (1mrad crossing angle) for low-lumi, with vertical and longitudinal cooling on. Physics stores are scheduled for the day.

STAR requests: high-lumi and low-lumi operation mode (6-hour fill):

high-lumi: no crossing angle 100kHz leveling, first 4 hours

low-lumi: 1mrad crossing angle and 13kHz leveling, last 2 hrs

STAR Status:

Running with high-lumi and low-lumi (4+2) dual mode. This mode will be used for the remaining operation until further notice.

HiLumi (first ~2hrs): DAQ rate should be ~800Hz, deadtime ~<7%

HiLumi (second 2 hrs): DAQ rate should be ~1.7 kHz, deadtime ~<20%

LoLumi (last 2hrs): DAQ rate should be ~4kHz, deadtime ~<50%

Issues:

· Frequent RDO failures required power cycles and caused runs to stop

· RDO iTPC S17:2 is masked out

· The building manager sent someone this morning to work on the water leaks from the roof

Notes:

When TOF LV is turned off, notify Alexei

There will be a tour during the access next Wednesday

BBC high voltage issues: follow the procedure in the manual to fix them and inform Akio

Discussion:

Thunderstorm/power dip preventive procedure (APEX mode) when CAD informs us.

If a power dip occurs, call the experts. If the global interlock in the DAQ room alarms, call Prashanth (if no response, go down the list). Inform shift leaders about this procedure.

https://drupal.star.bnl.gov/STAR/public/operations

Thursday, July 10:

Period Coordinator: Ashik Ikbal Sheikh

9AM CAD daily meetings: MWF

STAR operation meeting is still daily.

RHIC Schedule:

Yesterday, the entire day was APEX (~15 hrs).

The Physics stores are scheduled for today.

AuAu200 GeV, starting with leveling at 100kHz for HiLumi; vertical and longitudinal cooling on.

STAR requests: high-lumi and low-lumi operation mode (6-hour fill):

HiLumi (first ~2hrs): DAQ rate should be ~800Hz, deadtime ~<7%

HiLumi (second 2 hrs): DAQ rate should be ~1.7 kHz, deadtime ~<20%

LoLumi (last 2hrs): DAQ rate should be ~4kHz, deadtime ~<50%

STAR Status:

The STAR magnet was down the whole day yesterday. After continuous efforts by CAS, the magnet issues were resolved around midnight. We got back to production around 4:20 am.

Current status: running with high-lumi and low-lumi (4+2) dual mode. This mode will be used for the remaining operation until further notice.

HiLumi: DAQ rate should be ~2kHz, deadtime ~<25%

LoLumi: DAQ rate should be ~4kHz, deadtime ~<50%

Issues:

• eTOF HV: unused sectors were shown as off (blue) in StandBy status.

DOs power cycled the eTOF HV, but that didn't solve the problem. Experts have been informed.

• The building manager sent someone this morning to work on the water leaks from the roof

Discussion:

Thunderstorm/power dip preventive procedure (APEX mode) when CAD informs us.

If a power dip occurs, call the experts. If the global interlock in the DAQ room alarms, call Prashanth (if no response, go down the list). Inform shift leaders about this procedure.

https://drupal.star.bnl.gov/STAR/public/operations

Wednesday, July 09:

Period Coordinator: Ashik Ikbal Sheikh

9AM CAD daily meetings: MWF

STAR operation meeting is still daily.

RHIC Schedule:

APEX (~15 hrs) is scheduled for today.

Then AuAu200 GeV after APEX, as planned (if the magnet issues are fixed).

Starting from the next fill, leveling at 100kHz for HiLumi; vertical and longitudinal cooling on.

STAR requests: high-lumi and low-lumi operation mode (6-hour fill):

HiLumi (first ~2hrs): DAQ rate should be ~800Hz, deadtime ~<7%

HiLumi (second 2 hrs): DAQ rate should be ~1.7 kHz, deadtime ~<20%

LoLumi (last 2hrs): DAQ rate should be ~4kHz, deadtime ~<50%

STAR Status:

STAR is in APEX mode. Magnet issues have persisted since 9:30pm; CAS is working on them.

Issues:

• Shift leader’s desktop is very slow and sometimes non-responsive

• A couple of water leaks from the roof – possibly from the AC

Notes:

Alexei is requested to turn off the TOF FEEs during APEX.

Discussion:

• Need to discuss the vernier scan next week

Thunderstorm/power dip preventive procedure (APEX mode) when CAD informs us.

If a power dip occurs, call the experts. If the global interlock in the DAQ room alarms, call Prashanth (if no response, go down the list). Inform shift leaders about this procedure.

https://drupal.star.bnl.gov/STAR/public/operations

Period Coordinator: Xiaoxuan Chu

Tuesday, July 8

RHIC Schedule:

56MHz yesterday, then AuAu200 GeV, leveling at 100kHz for HiLumi, vertical and longitudinal cooling on.

Today:

Possibility of getting the tetro replaced at 1004, which may take about 8 hours.

Then physics store

9AM CAD daily meetings: MWF

STAR operation meeting is still daily

STAR Status:

Magnet temperature is high. There was no magnet trip alarm, but Prashanth called: he received a notice from CAS that one sector of the magnet has tripped and the magnet is not working correctly. Detectors were put in magnet ramp-down mode.

MCR just called: they will switch to low lumi for 2 hrs, then dump the beam; they need a 2 hr access.

Bring the magnet to half field; if we keep half field, require 13kHz leveling from the beginning of the next fill.

Run with high-lumi and low-lumi (4+2) dual mode. This mode will be used for the remaining operation until further notice.

HiLumi: DAQ rate should be ~2kHz, deadtime ~<25%

LoLumi: DAQ rate should be ~4kHz, deadtime ~<50%

Detector Issues:

- Anode sector 4:5 tripped several times: lowered the voltage (1390 V to 1345 V) and raised the current (2 uA to 4 uA).

- iTPC RDOs 10:2 and 16:3 were masked out

- No trip alarm when sTGC HV Cable 28 tripped: David will help

- TOF RDOs failed for too many trays.

"tof01 CAUTION tofMain tof.C:#470 TOF: too many trays (103>5) require power cycle -- consider TOF/MTD CANBUS reset! "

"tof01 CRITICAL tofMain tof.C:#495 Too many recoveries -- POWERCYCLE TOF LV ".

Power cycling TOF LV didn't work; we then reset the TOF CANBUS, which apparently also did not work:

"mtdMain Too many recoveries -- POWERCYCLE MTD LV" and "tofMain Too many recoveries -- POWERCYCLE TOF LV"

A CANBUS restart plus an MTD LV power cycle fixed it; follow the procedure in the instructions.

Shift leader: write the shift log promptly and accurately

Perform calibration run today

Plan:

Vernier scan this week: will discuss with CAD; Jeff will help put back the vernier scan trigger config

Thunderstorm/power dip preventive procedure (APEX mode) when CAD informs us.

If a power dip occurs, call the experts. If the global interlock in the DAQ room alarms, call Prashanth (if no response, go down the list). Inform shift leaders about this procedure.

https://drupal.star.bnl.gov/STAR/public/operations

Monday, July 7

RHIC Schedule:

56 MHz: 900-1700, APEX now. Then AuAu200 GeV, leveling at 100kHz for HiLumi, vertical and longitudinal cooling on.

9AM CAD daily meetings: MWF

STAR operation meeting is still daily

STAR Status:

Run with high-lumi and low-lumi (4+2) dual mode. This mode will be used for the remaining operation until further notice.

HiLumi: DAQ rate should be ~2kHz, deadtime ~<25%

LoLumi: DAQ rate should be ~4kHz, deadtime ~<50%

Issues:

- iTPC RDO i22:4 masked out

- sTGC ROB 15: power cycle

- TOF LV too many recoveries: power cycle

- DAQ EVB error: Tonko helped to flush the files to HPSS, fixed

- Accidentally turned off TPC FEEs: Tonko and Alexei helped; power cycled VME 58

Shift leader: write the shift log promptly and accurately

Perform calibration run today

Plan:

Vernier scan next week

Thunderstorm/power dip preventive procedure (APEX mode) when CAD informs us.

If a power dip occurs, call the experts. If the global interlock in the DAQ room alarms, call Prashanth (if no response, go down the list). Inform shift leaders about this procedure.

https://drupal.star.bnl.gov/STAR/public/operations

Sunday, July 6

RHIC Schedule:

AuAu200 GeV for the long weekend. Leveling at 100kHz for HiLumi; vertical and longitudinal cooling on.

9AM CAD daily meetings: MWF

STAR operation meeting is still daily

STAR Status:

Run with high-lumi and low-lumi (4+2) dual mode. This mode will be used for the remaining operation until further notice.

HiLumi: DAQ rate should be ~2kHz, deadtime ~<25%

LoLumi: DAQ rate should be ~4kHz, deadtime ~<50%

Issues:

- Many BSMD auto-recoveries, requiring a restart of RDO2; we power cycled VME 39 for RDO2, then got red low-current alarms from the associated crates. Oleg and David helped: we turned on the FEEs for those crates from the BEMC Main Control GUI, fixed. 3 runs were taken without BSMD and 2 runs were partially affected

- iTPC: RDOs S16:2, S20:2, S12:1 and S03:4 masked out

- "FCS: DEP02:7 failed": fixed by starting a new run

- Too many TOF tray recoveries: reset Canbus, fixed

- EQ4 not working: power cycle VME 84 EQ4, fixed

- GMT gas was switched

- Check laser in the trigger when changing to low-lumi

Perform calibration run today

Shift leader: write the shift log promptly and confirm with the online crew that QA plots are good for each run

Discussion:

Figure out the cause of the platform power dip (Thu, July 3)

Vernier scan next week

Thunderstorm/power dip preventive procedure (APEX mode) when CAD informs us.

If a power dip occurs, call the experts. If the global interlock in the DAQ room alarms, call Prashanth (if no response, go down the list). Inform shift leaders about this procedure.

https://drupal.star.bnl.gov/STAR/public/operations

Saturday, July 5

RHIC Schedule:

AuAu200 GeV for the long weekend. Leveling at 100kHz for HiLumi; vertical and longitudinal cooling on.

9AM CAD daily meetings: MWF

STAR operation meeting is still daily

STAR Status:

Run with high-lumi and low-lumi (4+2) dual mode. This mode will be used for the remaining operation until further notice.

HiLumi: DAQ rate should be ~2kHz, deadtime ~<25%

LoLumi: DAQ rate should be ~4kHz, deadtime ~<50%

Issues:

- DAQ monitoring dead and L2 machine off: Jeff helped bring them back

- "l2new l2new.c:#2181 More than 2000 timeouts..." (twice): followed https://www.star.bnl.gov/public/trg/trouble/L2_stop_run_recovery.txt and rebooted the trigger, fixed

- VPD error during pedestal_rhicclock_clean: Tonko helped, fixed; this should not be a problem, so we can go ahead with pedestal_rhicclock_clean

- iTPC RDOs auto recovery failed: manually power cycle, fixed; iTPC S5-3 masked out

- sTGC HV: 3:6 tripped: cleared

- TOF gas switched; TOF tray error 23 and 32: fixed

- EPD East<ADC> had light yellow bands; back to normal from the next run

- sTGC FOB04 error: fixed

Discussion:

Figure out the cause of the platform power dip (Thu, July 3)

Vernier scan next week

Thunderstorm/power dip preventive procedure (APEX mode) when CAD informs us.

If a power dip occurs, call the experts. If the global interlock in the DAQ room alarms, call Prashanth (if no response, go down the list). Inform shift leaders about this procedure.

https://drupal.star.bnl.gov/STAR/public/operations

Friday, July 4

RHIC Schedule:

AuAu200 GeV for the long weekend. Leveling at 100kHz for HiLumi; vertical and longitudinal cooling on.

9AM CAD daily meetings: no meeting today

STAR operation meeting is still daily

STAR Status:

Run with high-lumi and low-lumi (4+2) dual mode. This mode will be used for the remaining operation until further notice.

HiLumi: DAQ rate should be ~2kHz, deadtime ~<25%

LoLumi: DAQ rate should be ~4kHz, deadtime ~<50%

Issues:

- A smell like burning plastic/wiring was reported at 1430. Prashanth called CAS; we were told to monitor the smell and not to call the fire department unless smoke is seen or a fire alarm trips. The smell faded on its own over the next 30 minutes or so.

- Power dip:

- Power dip and magnet trip at 7:30pm; shifters were following the checklist for power dip recovery. At that moment, subsystems were fine.

- But at 9:10pm, CAS staff came and worked in the DAQ room; we got gas system and water system alarms, and CAS set the system into bypass mode. We lost power on the platform inside; the fire department came and found nothing obvious.

- Experts came to STAR or joined online to bring the subsystems back.

- At 10:10pm, CAS brought back the power for the platform.

- Detectors:

- We didn’t have time to perform daily calibration yesterday: need calibration runs today

- MTD RDOs auto recoveries failed/too many recoveries, and requiring TOF trays power cycle: TOF/MTD CANBUS reset

- ETOW Crate ID 1: power cycle; EEMC GUI froze: power cycle corresponding VME and “reload master”

- If a TPC anode trips several times: call Alexei first and follow the expert's advice

Discussion:

Need to figure out the cause of the platform power dip

Vernier scan next week

Thunderstorm/power dip preventive procedure (APEX mode) when CAD informs us.

If a power dip occurs, call the experts. If the global interlock in the DAQ room alarms, call Prashanth (if no response, go down the list). Inform shift leaders about this procedure.

https://drupal.star.bnl.gov/STAR/public/operations

Thursday, July 3

RHIC Schedule:

AuAu200 GeV continued after maintenance. Starting from the next fill, leveling at 100kHz for HiLumi; vertical and longitudinal cooling on.

9AM CAD daily meetings: no meeting this Friday

STAR operation meeting is still daily

Physics for long weekend

STAR Status:

Run with high-lumi and low-lumi (4+2) dual mode. This mode will be used for the remaining operation until further notice; leveling to 100kHz from the next fill

HiLumi: DAQ rate should be ~2kHz, deadtime ~<25%

LoLumi: DAQ rate should be ~4kHz, deadtime ~<50%

Issues:

- TPC computer froze and was fixed by experts; shifters should be aware of the alternative solution of connecting SC5, in case it freezes again

- TPC Anode S4 C5 trips: reduce voltage by 45V

- iTPC S18-4 was masked out for a "db-check" error message (meaning the PS info is not consistent): we should start a new run when this happens, keep it in mind; it is now unmasked. S18-1 stays masked

- AC water leaking: will be fixed today

Discussion:

- Need to discuss the vernier scan next week

Thunderstorm/power dip preventive procedure (APEX mode) when CAD informs us.

If a power dip occurs, call the experts. If the global interlock in the DAQ room alarms, call Prashanth (if no response, go down the list). Inform shift leaders about this procedure.

https://drupal.star.bnl.gov/STAR/public/operations

02 July 2025

(Period Coordinator: Xiaoxuan Chu)

Maintenance day Wednesday (July 2, today 800-1600); AuAu200 GeV continues afterwards.

STAR Status:

Run with high-lumi and low-lumi (4+2) dual mode. This mode will be used for the remaining operation until further notice; will request leveling to 100kHz from the next fill

HiLumi: DAQ rate should be ~2kHz, deadtime ~<25%

LoLumi: DAQ rate should be ~4kHz, deadtime ~<50%

Issues:

- ZDC TCIM had wrong thresholds after the power dip, so the ZDC rate was not read correctly at the beginning of the fill (around noon yesterday); fixed by reloading the threshold values. Reload the ZDC TCIM threshold values after a power dip; add this instruction to the checklist

- EPD was off after the power dip and didn't take data for two fills; the GUI doesn't show it is off, but the online plots do. Shifters need to pay more attention;

SLs should confirm with the crew that QA plots are good for each run;

Shifters follow the checklist for the power dip;

Calibration runs should be performed properly.

- Laser PC was frozen, fixed

- ETOW crate ID error: took it out for one run and included it again in the next run, fixed

- iTPC RDOs auto-recoveries

Today’s schedule:

Magnet is off now

BTOW: Oleg and Tim

EPD: Tim

TPC electronics: Tonko

FST: Prithwish

eTOF: Alexei

EIC related walkthrough

Discussion:

We will request leveling to 100kHz from the next fill (inform CAD)

Jeff will implement dimuon and BHT1 trigger adjustments:

- Low-lumi: Remove prescale 1 from and save them to st-physics

- Hi-lumi: will cut rate to forward and upc above 85 kHz

Thunderstorm/power dip preventive procedure (APEX mode) when CAD informs us.

If a power dip occurs, call the experts. If the global interlock in the DAQ room alarms, call Prashanth (if no response, go down the list). Inform shift leaders about this procedure.

https://drupal.star.bnl.gov/STAR/public/operations

01 July 2025

(Period Coordinator: Xiaoxuan Chu)

RHIC Schedule:

AuAu200 GeV continued. Intensity up to 1.95x10^11, at the level of the best stores. Turn-around between fills is about 40 minutes; 100-140kHz ZDC rates; request leveling at 85kHz for HiLumi; vertical and longitudinal cooling on.

9AM CAD daily meetings: MWF only

STAR operation meeting is still daily.

Mainly physics, maintenance day Wednesday (July 2)

STAR Status:

Run with high-lumi and low-lumi (4+2) dual mode. This mode will be used for the remaining operation until further notice; 85kHz leveling

HiLumi: DAQ rate should be ~2kHz, deadtime below ~25%

LoLumi: DAQ rate should be ~4kHz, deadtime below ~50%

Everything has been brought back after last night's power dip; taking cosmic data now

- TOF: Tray 47 masked out

- FST: failure codes were not cleared by a power cycle; access was requested for the Emergency Restart procedure; fixed

- BTOW: RDOs and configuration failed, fixed

- EEMC: communication lost, fixed

Plans:

STAR requests a change to the high-lumi/low-lumi operation mode on Friday (6-hour fills):

high-lumi: no crossing angle, 85kHz leveling, first 4 hours

low-lumi: 1 mrad crossing angle and 13kHz leveling
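The (4+2) split of a fill can be sketched as a small lookup. This is a minimal sketch, assuming a 6-hour fill whose first 4 hours run in high-lumi mode and whose remaining 2 hours run in low-lumi mode, with the crossing angle and leveling values taken from the plan above. `FillMode` and `fill_mode` are hypothetical names for illustration only; they are not part of any STAR or CAD software.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FillMode:
    """Beam settings for one part of the fill (hypothetical representation)."""
    name: str
    crossing_angle_mrad: float
    leveling_khz: float


# Values as stated in the plan: high-lumi has no crossing angle and 85kHz
# leveling; low-lumi has a 1 mrad crossing angle and 13kHz leveling.
HIGH_LUMI = FillMode("high-lumi", 0.0, 85.0)
LOW_LUMI = FillMode("low-lumi", 1.0, 13.0)

FILL_HOURS = 6.0       # assumed total fill length
HIGH_LUMI_HOURS = 4.0  # high-lumi runs for the first 4 hours


def fill_mode(hours_into_fill: float) -> FillMode:
    """Return the planned mode for a time (in hours) since the start of the fill."""
    if not 0.0 <= hours_into_fill <= FILL_HOURS:
        raise ValueError("time is outside the planned 6-hour fill")
    return HIGH_LUMI if hours_into_fill < HIGH_LUMI_HOURS else LOW_LUMI
```

For example, `fill_mode(1.5)` gives the high-lumi settings and `fill_mode(4.5)` gives the low-lumi ones. Real fills will not switch exactly on the hour; the boundary here is only the nominal plan.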

Discussion:

Test with a no-leveling fill (if it starts before 12:00 EDT): Tonko will call in and help

Prescale BHT1 in low-lumi; for high-lumi we stay with a prescale of 10: Jeff

Plan for tomorrow (Wed 08:00-16:00, maintenance):

BTOW: Oleg; we will ask Tim for help (with the magnet down)

TPC electronics: Tonko

FST: Prithwish

eTOF: Alexei

EIC related walkthrough

Thunderstorm/power dip: follow the preventive procedure (APEX mode) when CAD informs us.

If a power dip occurs, call the experts. If the global interlock in the DAQ room alarms, call Prashanth (if there is no response, go down the call list). Inform shift leaders about this procedure.


30 June 2025

(Period Coordinator: Zhangbu Xu)

(incoming period coordinator: Xiaoxuan Chu)

RHIC Schedule

RHIC status:

AuAu200 GeV continued. Intensity up to 1.95x10^11, at the level of the best stores. Turn-around between fills is about 40 minutes; ZDC rates of 100-140kHz; we request leveling at 85kHz for HiLumi; vertical and longitudinal cooling on.

9AM CAD daily meetings: MWF only

STAR operation meeting is still daily.

Mainly physics, maintenance day Wednesday (July 2)

STAR status

· Running in high-lumi/low-lumi (4+2) dual mode for the rest of the run until further notice (implemented Friday afternoon, 06/20); 85kHz leveling.

HiLumi: DAQ rate should be ~2kHz, deadtime below ~25%

LoLumi: DAQ rate should be ~4kHz, deadtime below ~50%

If these numbers are far off, something is wrong; restart the run if you cannot find the root cause.
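The rate/deadtime sanity check can be written down explicitly. This is a minimal sketch, assuming the DAQ rate (kHz) and deadtime (%) are read off the run-control display by hand. These notes do not define "far off", so the 50% relative tolerance on the rate is an arbitrary, hypothetical choice. `EXPECTED`, `RATE_TOLERANCE` and `check_run` are illustrative names only, not part of any STAR tool.

```python
# Expected values from the notes: (nominal DAQ rate in kHz, max deadtime in %).
EXPECTED = {
    "HiLumi": (2.0, 25.0),
    "LoLumi": (4.0, 50.0),
}

# Hypothetical: flag a rate more than 50% away from the nominal value.
RATE_TOLERANCE = 0.5


def check_run(mode: str, daq_rate_khz: float, deadtime_pct: float) -> list[str]:
    """Return a list of warnings; an empty list means the numbers look normal."""
    nominal_rate, max_deadtime = EXPECTED[mode]
    warnings = []
    # DAQ rate too far from its nominal value, in either direction.
    if abs(daq_rate_khz - nominal_rate) > RATE_TOLERANCE * nominal_rate:
        warnings.append(
            f"{mode}: DAQ rate {daq_rate_khz} kHz, expected ~{nominal_rate} kHz")
    # Deadtime above the expected upper bound.
    if deadtime_pct > max_deadtime:
        warnings.append(
            f"{mode}: deadtime {deadtime_pct}%, expected < ~{max_deadtime}%")
    return warnings
```

For example, `check_run("HiLumi", 2.1, 22.0)` returns an empty list, while `check_run("LoLumi", 1.5, 70.0)` returns two warnings. In that case, look for the root cause first and restart the run if none is found.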

· zdc_mb 1.2B; BHT3 1/nb; (exclude zdc_mb in HiLumi and halfField)

· West poletip tripped at 9:30 PM. CAS replaced the broken regulator power supply.

· BTOW CANbus connection down. Access at 1:30 AM to power cycle (Oleg and David)

SCSERV power cycled => communication restored

BEMC back

(radstone board needed to be rebooted)

· AC water leaking: fixed?

· How can we achieve the luminosity goal?

vxWorks machine login user/password, BEMCCAN

Plans

STAR requests a change to the high-lumi/low-lumi operation mode on Friday (6-hour fills):

high-lumi: no crossing angle, 85kHz leveling, first 4 hours.

low-lumi: 1 mrad crossing angle and 13kHz leveling

When changing the magnet to HalfField, or from HalfField back to FullField, call Prashanth.

Discussion

Thunderstorm/power dip: follow the preventive procedure (APEX mode) when CAD informs us.

If a power dip occurs, call the experts. If the global interlock in the DAQ room alarms, call Prashanth (if there is no response, go down the call list). Inform shift leaders about this procedure.


29 June 2025

(Period Coordinator: Zhangbu Xu)

RHIC Schedule

RHIC status:

AuAu200 GeV continued. Intensity up to 1.95x10^11, at the level of the best stores. Turn-around between fills is about 40 minutes; ZDC rates of 100-140kHz; we request leveling at 85kHz for HiLumi; vertical and longitudinal cooling on.

9AM CAD daily meetings: MWF only

STAR operation meeting is still daily.

Mainly physics, maintenance day next week (July 2)

STAR status

· Running in high-lumi/low-lumi (4+2) dual mode for the rest of the run until further notice (implemented Friday afternoon, 06/20); 85kHz leveling.

HiLumi: DAQ rate should be ~2kHz, deadtime below ~25%

LoLumi: DAQ rate should be ~4kHz, deadtime below ~50%

If these numbers are far off, something is wrong; restart the run if you cannot find the root cause.

· zdc_mb 1.16B; BHT3 830/ub; (exclude zdc_mb in HiLumi and halfField)

· AC water leaking: fixed?

· ETOW instruction: a “Master reload” fixes the issue behind this message:

05:36:15 | 1 | etow | CAUTION | etowMain | emc.C:#846 | ETOW: configuration failed -- watch ETOW triggers or restart run.

Plans

STAR requests a change to the high-lumi/low-lumi operation mode on Friday (6-hour fills):

high-lumi: no crossing angle, 85kHz leveling, first 4 hours.

low-lumi: 1 mrad crossing angle and 13kHz leveling

When changing the magnet to HalfField, or from HalfField back to FullField, call Prashanth.

Discussion

Thunderstorm/power dip: follow the preventive procedure (APEX mode) when CAD informs us.

If a power dip occurs, call the experts. If the global interlock in the DAQ room alarms, call Prashanth (if there is no response, go down the call list). Inform shift leaders about this procedure.


28 June 2025

(Period Coordinator: Zhangbu Xu)

RHIC Schedule

RHIC status:

AuAu200 GeV continued. Intensity up to 1.95x10^11, at the level of the best stores. Turn-around between fills is about 40 minutes; ZDC rates of 100-140kHz; we request leveling at 85kHz for HiLumi; vertical and longitudinal cooling on.

9AM CAD daily meetings: MWF only

STAR operation meeting is still daily.

Mainly physics, maintenance day next week (July 2)

STAR status

· Running in high-lumi/low-lumi (4+2) dual mode for the rest of the run until further notice (implemented Friday afternoon, 06/20); 85kHz leveling.

HiLumi: DAQ rate should be ~2kHz, deadtime below ~25%

LoLumi: DAQ rate should be ~4kHz, deadtime below ~50%

If these numbers are far off, something is wrong; restart the run if you cannot find the root cause.

· zdc_mb 1.1B; BHT3 700/ub; (exclude zdc_mb in HiLumi and halfField)

· AC water leaking: fixed?

· TPC anode trips (HV lowered by 50V): raised back to full HV, with the trip limit set to 2µA. Seven RDOs masked out. Test whether the critical message works (issue resolved).

Unmasked all, but some issues still need to be fixed; re-masked 3.

Plans

STAR requests a change to the high-lumi/low-lumi operation mode on Friday (6-hour fills):

high-lumi: no crossing angle, 85kHz leveling, first 4 hours.

low-lumi: 1 mrad crossing angle and 13kHz leveling

When changing the magnet to HalfField, or from HalfField back to FullField, call Prashanth.

Discussion

Thunderstorm/power dip: follow the preventive procedure (APEX mode) when CAD informs us.

If a power dip occurs, call the experts. If the global interlock in the DAQ room alarms, call Prashanth (if there is no response, go down the call list). Inform shift leaders about this procedure.


27 June 2025

(Period Coordinator: Zhangbu Xu)

RHIC Schedule

RHIC status:

AuAu200 GeV continued. Intensity up to 1.95x10^11, at the level of the best stores. Turn-around between fills is about 40 minutes; ZDC rates of 100-140kHz; we request leveling at 85kHz for HiLumi; vertical and longitudinal cooling on.

9AM CAD daily meetings: MWF only

STAR operation meeting is still daily.

Mainly physics, maintenance day next week (July 2)

STAR status

· Running in high-lumi/low-lumi (4+2) dual mode for the rest of the run until further notice (implemented Friday afternoon, 06/20); 85kHz leveling.

HiLumi: DAQ rate should be ~2kHz, deadtime below ~25%

LoLumi: DAQ rate should be ~4kHz, deadtime below ~50%

If these numbers are far off, something is wrong; restart the run if you cannot find the root cause.

· zdc_mb 107M; BHT3 600/ub; (exclude zdc_mb in HiLumi and halffield)

· AC water leaking: fixed?

· TPC anode trips (HV lowered by 50V): raised back to full HV, with the trip limit set to 2µA. Three RDOs masked out. Test whether the critical message works.

Unmasked all, but some issues still need to be fixed; re-masked 4.

· BTOW configuration issues this morning.

Plans

STAR requests a change to the high-lumi/low-lumi operation mode on Friday (6-hour fills):

high-lumi: no crossing angle, 85kHz leveling, first 4 hours.

low-lumi: 1 mrad crossing angle and 13kHz leveling

When changing the magnet to HalfField, or from HalfField back to FullField, call Prashanth.

Discussion

Thunderstorm/power dip: follow the preventive procedure (APEX mode) when CAD informs us.

If a power dip occurs, call the experts. If the global interlock in the DAQ room alarms, call Prashanth (if there is no response, go down the call list). Inform shift leaders about this procedure.


26 June 2025

(Period Coordinator: Zhangbu Xu)

RHIC Schedule

RHIC status:

AuAu200 GeV continued. Intensity up to 2.2x10^11, at the level of the best stores. Turn-around between fills is about 40 minutes; ZDC rates of 100-140kHz; we request leveling at 85kHz for HiLumi; vertical and longitudinal cooling on.

9AM CAD daily meetings: MWF only

Mainly physics, maintenance day next week (July 2)

STAR status

· Running in high-lumi/low-lumi (4+2) dual mode for the rest of the run until further notice (implemented Friday afternoon, 06/20); 85kHz leveling from the next fill.

· zdc_mb 950M; BHT3 500/ub; (exclude zdc_mb in HiLumi and halffield)

· AC water leaking: fixed?

· TPC anode trips (HV lowered by 50V): raised back to full HV, with the trip limit set to 2µA. Three RDOs masked out. Send Tonko critical support (still masked out).

Test whether the critical message works.

· The TPC trip alarm handler did not go off? For a single-channel trip, the alarm handler does not go off.

Alexei: will talk to Tonko and David; FIXED

· L4 halfField tracking FIXED.

· Jamie requested two more scaler-rate triggers for luminosity monitoring.

Plans

STAR requests a change to the high-lumi/low-lumi operation mode on Friday (6-hour fills):

high-lumi: no crossing angle, 85kHz leveling, first 4 hours.

low-lumi: 1 mrad crossing angle and 13kHz leveling

When changing the magnet to HalfField, or from HalfField back to FullField, call Prashanth.

Discussion

Thunderstorm/power dip: follow the preventive procedure (APEX mode) when CAD informs us.

If a power dip occurs, call the experts. If the global interlock in the DAQ room alarms, call Prashanth