General

This section contains general information about STAR. It also serves as a container for various activities of a public nature (operations, STSG, STAR management documents, etc.).

Collaboration

[Under construction; see this link in the interim]

Acknowledgements

LaTeX version (special characters escaped):

We thank the RHIC Operations Group and SDCC at BNL, the NERSC Center at LBNL, and the Open Science Grid consortium for providing resources and support. This work was supported in part by the Office of Nuclear Physics within the U.S. DOE Office of Science, the U.S. National Science Foundation, National Natural Science Foundation of China, Chinese Academy of Science, the Ministry of Science and Technology of China and the Chinese Ministry of Education, NSTC Taipei, the National Research Foundation of Korea, Czech Science Foundation and Ministry of Education, Youth and Sports of the Czech Republic, Hungarian National Research, Development and Innovation Office, New National Excellency Programme of the Hungarian Ministry of Human Capacities, Department of Atomic Energy and Department of Science and Technology of the Government of India, the National Science Centre and WUT ID-UB of Poland, the Ministry of Science, Education and Sports of the Republic of Croatia, German Bundesministerium f\"ur Bildung, Wissenschaft, Forschung and Technologie (BMBF), Helmholtz Association, Ministry of Education, Culture, Sports, Science, and Technology (MEXT), and Japan Society for the Promotion of Science (JSPS).

Unicode version (native Unicode characters):

We thank the RHIC Operations Group and SDCC at BNL, the NERSC Center at LBNL, and the Open Science Grid consortium for providing resources and support. This work was supported in part by the Office of Nuclear Physics within the U.S. DOE Office of Science, the U.S. National Science Foundation, National Natural Science Foundation of China, Chinese Academy of Science, the Ministry of Science and Technology of China and the Chinese Ministry of Education, NSTC Taipei, the National Research Foundation of Korea, Czech Science Foundation and Ministry of Education, Youth and Sports of the Czech Republic, Hungarian National Research, Development and Innovation Office, New National Excellency Programme of the Hungarian Ministry of Human Capacities, Department of Atomic Energy and Department of Science and Technology of the Government of India, the National Science Centre and WUT ID-UB of Poland, the Ministry of Science, Education and Sports of the Republic of Croatia, German Bundesministerium für Bildung, Wissenschaft, Forschung and Technologie (BMBF), Helmholtz Association, Ministry of Education, Culture, Sports, Science, and Technology (MEXT), and Japan Society for the Promotion of Science (JSPS).

STAR Funding Agencies

STAR is supported by several funding agencies in countries on four continents, in addition to significant support from STAR member institutions. Here is a list of STAR funding agencies, with links to their web pages.

- Office of Nuclear Physics, Office of High Energy Physics, Office of Science, Department of Energy, United States

- National Science Foundation, United States

- Frankfurt Institute for Advanced Studies, Germany

- Institut National de Physique Nucléaire et de Physique des Particules, France

- Fundação de Amparo à Pesquisa do Estado de São Paulo, Brazil

- Ministry of Science and Technology, Russia

- National Natural Science Foundation, China

- Chinese Academy of Sciences (CAS), China

- Ministry of Science and Technology (MoST), China

- Department of Atomic Energy (DAE), India

- Department of Science and Technology, India

- Grant Agency of Czech Republic, Czech Republic

- Research Council of Fundamental Science & Technology (KRCF), Korea

- Research Foundation, Korea

Nominated Speakers 2019-2026

| Conference | Date | Abstracts due | Speaker(s) (talk uploaded/not uploaded/not uploaded but direct invite) | Webpage |

|---|---|---|---|---|

| Excited QCD 2019 | Jan 30 – Feb 3 | | 1 talk (Jana Bielcikova) | https://indico.cern.ch/event/720726/ |

| WWND2019 | Jan 6-12 | | 11 talks (Hiroki Kato, Yota Kawamura, Shuai Yang, Pengfei Wang, Subhash Singha, Jaroslav Adam, Niseem Magdy, Saehanseul Oh, Matt Posik, Zhenzhen Yang, Takafumi Niida) | https://indico.cern.ch/event/766194/ |

| HFM-2019 | Mar 18-20 | | 1 plenary talk (Md. Nasim) | |

| Moriond QCD 2019 | Mar 23-30 | | 2 plenary talks (Jaroslav Bielcik, Zhenyu Ye) | |

| Fairness 2019 | May 20-24 | | 1 talk (Leszek Kosarzewski) | |

| DIS2019 | April 8-12 | | 1 talk (Petr Chaloupka) | |

| RHIC and AGS AUM 2019, jet workshop | June 4-7 | | 2 talks (Saehanseul Oh, Kolja Kauder) | https://www.bnl.gov/aum2019/ |

| RHIC and AGS AUM 2019, spin workshop | June 4-7 | | 4 talks (Qian Yang, Renee Fatemi, Jinlong Zhang, Amilkar Quintero) | https://www.bnl.gov/aum2019/ |

| RHIC and AGS 2019 | June 4-7 | | 3 plenary talks (James Daniel Brandenburg, Amilkar Quintero, Irakli Chakaberia) | https://www.bnl.gov/aum2019/ |

| SQM2019 | June 10-15 | | 2 plenary talks (Guannan Xin, Jie Zhao) | https://sqm2019.ba.infn.it |

| IS2019 | June 24-28 | | 1 plenary talk (Shengli Huang) | https://www.bnl.gov/is2019/ |

| Quarkonium workshop 2019 | May 13-17 | | 2 speakers (Qian Yang, Jaroslav Bielcik) | |

| Hadron 2019 | August 16-21 | | 1 talk (Jinhui Chen) | http://hadron2019.csp.escience.cn |

| APS DPF 2019 | July 29-Aug 2 | | 2 talks (Bill Schmidke, Niseem Magdy) | |

| ICNFP2019 | August 21-29 | | 3 talks (Ahmed Hamed, Jaroslav Bielcik, David Tlusty (plenary)) | https://indico.cern.ch/event/754973/ |

| Pacific Spin 2019 | August 27-30 | | 1 talk (Akio Ogawa) | |

| ISMD 2019 | September 9-13 | | 1 plenary talk (Qinghua Xu) | https://indico.cern.ch/event/761800 |

| QFTHEP’2019 | September 22-29 | | 1 plenary speaker (Grigory Nigmatkulov) | http://qfthep.sinp.msu.ru |

| QM2019 | November 4-9 | | 1 plenary speaker (Zhangbu Xu), 5 parallel speakers (Jie Zhao, Ashish Pandav, Hanseul Oh, Muhammad Usman, Yanfang Liu) | https://indico.cern.ch/event/792436/ |

| RHIC and AGS open forum meeting 2019 | October 15 | | 1 talk (Carl Gagliardi) | |

| Workshop on forward physics 2019 | November 18-21 | | 1 talk (David Kapukchyan) | https://indico.cern.ch/event/823693/ |

| Workshop MPI at the LHC 2019 | November 18-22 | | 1 talk (Jana Bielcikova) | https://indico.cern.ch/event/816226/ |

| 2020 Santa Fe Jets and Heavy Flavor workshop | February 3-5 | | 1 talk (Alex Jentsch) | http://www.cvent.com/events/2020-santa-fe-jets-and-heavy-flavor-workshop/event-summary-d48f98a525a74998a367d58d8bbb4362.aspx |

| Moriond QCD 2020 | | | | http://moriond.in2p3.fr/2020/QCD/ |

| WWND2020 | March 1-7 | | 6 talks (Prithwish Tribedy, Annika Ewigleben, Daniel Brandenburg, Shengli Huang, Adam Gibson-Even, Liang Yue) | https://indico.cern.ch/event/841247/ |

| Transversity 2020 | May 25-29 | | | https://agenda.infn.it/e/transversity2020 (postponed) |

| Nucleus 2020 | May 26-30 | | 1 speaker (Alexey Aparin) | https://events.spbu.ru/events/nucleus-2020?lang=Eng (postponed to Oct 11-17, 2020, online) |

| CPOD2020 | May 4-8 | | 2 speakers | https://indico.cern.ch/event/851194/ (postponed) |

| RHIC and AGS users meeting 2020, small system workshop | June 9 | | 1 speaker (Roy Lacey) | Postponed; became an online meeting |

| RHIC and AGS users meeting 2020 | June 9-12 | | 3 plenary speakers | Postponed; became an online meeting |

| Hard Probes 2020 | June 1-5 | | 1 plenary (Zaochen Yi), 2 parallel (Zhen Wang) | https://indico.cern.ch/event/751767/ (became an online meeting) |

| FAIR-NICA centrality flow workshop | Aug 24-28 | | 2 plenary (ShinIchi Esumi, Shusu Shi) | http://indico.oris.mephi.ru/event/181/ |

| ICNFP 2020 | Sep 4-12 | | 1 plenary (Ahmed Hamed) | https://indico.cern.ch/event/868045/ |

| Online AUM 2020 | Oct 22-23 | | 2 plenary (run report: Daniel Cebra; highlight: Raghav Kunnawalkam Elayavalli); BES workshop: Takafumi Niida, Sam Heppelmann, Yang Wu; 2 Cold QCD (future plans: Scott Wissink; highlights: Nickolas Lukow); High pT: Isaac Mooney | https://www.bnl.gov/aum2020/ |

| CPOD 2021 | Mar 15-19 | | 1 plenary (ShinIchi Esumi), merged abstract: Prabhupada Dixit | https://indico.cern.ch/event/985460/ |

| Moriond 2021 | Mar 27-Apr 3 | | 2 speakers (Yu Zhang, Saehanseul Oh) | http://moriond.in2p3.fr/2021/QCD/ |

| DIS 2021 - WG6 parallel session | Apr 12-16 | | Oleg Tsai | https://www.stonybrook.edu/cfns/dis2021/ |

| GHP 2021 | Apr 13-16 | | Sooraj Radhakrishnan | https://indico.jlab.org/event/412/ |

| SQM 2021 | May 17-21 | | 1) Updates on flavor production from STAR (talk on May 17): Sooraj Radhakrishnan; 2) Recent milestones from STAR: New developments and open questions (talk on May 17): Rongrong Ma; 3) STAR Detector Upgrades (talk on May 22): Chi Yang; parallel talks: #189: Shenghui Zhang, #196: Yan Huang, #172&191: Moe Isshiki | https://indico.cern.ch/event/985652/ |

| AUM 2021 | June 8-21 | | Plenary: Matt Kelsey, Leszek Kosarzewski | https://www.bnl.gov/aum2021/ |

| Nucleus 2021 | Sep 20-25 | | Grigory Nigmatkulov | https://events.spbu.ru/events/nucleus-2021?lang=Eng, https://indico.cern.ch/event/1012633/ |

| ICNFP2021 | 23 Aug-2 Sep | | 1. All heavy ions: Ahmed Hamed; 2. All spin physics: Amilkar Quintero; 3. BES talk: Toshihiro Nonaka; 4. Mini topical review, High pT & jets: Nihar Sahoo; 5. Mini topical review, Heavy Flavor: Te-Chuan Huang; 6. Mini topical review, FCV: Chuan-Jian Zhang | https://indico.cern.ch/event/1025480/page/22286-workshops-icnfp-2021 |

| QCD-N2021 | Oct 4-8 | | Salvatore Fazio | https://indico.fis.ucm.es/event/16/ |

| 12th MPI at LHC WH5 | Oct 11-15 | | Yue-Hang Leung (combined STAR and PHENIX results) | https://indico.lip.pt/event/688/overview |

| DNP-Special Isobar Session | Oct 11-14 | | Sergei Voloshin | http://web.mit.edu/dnp2021/ |

| WWND 2022 | Feb 27-Mar 5 | | Yuanjing Ji, "Hypernuclei production at STAR"; Niseem Magdy, "CME search with isobar collisions"; Xu Sun, "STAR’s Forward Upgrade Program" | https://indico.cern.ch/event/1039540/ |

| CPHI 2022 | Mar 7-12 | | Xiaoxuan Chu | |

| Moriond 2022 | Mar 19-26 | | Tomas Truhlar | https://moriond.in2p3.fr/2022/ |

| Quark Matter 2022 | April 4-10 | | Prithwish Tribedy (Plenary); Merge talk 1 - Yue-Hang Leung; Merge talk 2 - Aswini Kumar Sahoo; Merge talk 3 - Tong Liu; Merge talk 5 - Yu Hu; Merge talk 6 - Haojie Xu; Merge talk 7 - Ashik Ikbal; Merge talk 9 - Ke Mi; Merge talk 10 - Ziyue Zhang | https://indico.cern.ch/event/895086/ |

| DIS 2022 | May 2-6 | | Generic invitation for speakers to submit abstracts | https://indico.cern.ch/event/1072533/ |

| Transversity 2022 | May 23-27 | | Will Jacobs | https://agenda.infn.it/event/19219/ |

| RHIC AUM 2022 | June 7-10 | | Evan Finch, Zilong Chang, Takafumi Niida | https://www.bnl.gov/rhicagsaum/ |

| SQM 2022 | June 13-17 | | Barbara Trzeciak (Plenary) | https://sqm2022.pusan.ac.kr |

| HF-WINC | July 14-16 | | Sonia Kabana | https://indico.cern.ch/event/883427/ |

| CIPANP 2022 | Aug 29-Sep 4 | | Niseem Magdy | https://agenda.hep.wisc.edu/event/1644/ |

| ICNFP 2022 | Aug 30-Sep 11 | | Barbara Trzeciak | https://indico.cern.ch/event/1133591/ |

| QNP 2022 | Sep 5-9 | | Yu Hu | https://indico.jlab.org/event/344/ |

| PIC 2022 (Tbilisi State University) | Sep 5-9 | | Sonia Kabana | https://indico.cern.ch/event/1158815/abstracts/ |

| Nuclear Science and Technologies | Sep 26-30 | | Grigory Nigmatkulov (only identified whom the organizers could invite; not an STC-selected talk) | https://indico.alem.cloud/event/1/ |

| EuNPC 2022 | Oct 24-28 | | Daniel Kikola | https://indico.cern.ch/event/1104299/ |

| MPI 2022 | Nov 14-18 | | Xiaoxuan Chu | https://indico.ift.uam-csic.es/event/14/ |

| CPOD 2022 | Nov 28-Dec 2 | | Md Nasim | |

| ICPAQGP-2023 | Feb 7-10, 2023 | | Nihar Sahoo, Subhash Singha | https://events.vecc.gov.in/event/19/overview |

| Moriond QCD 2023 | March 25-April 1 | | Veronica Verkest, Zachary Sweger | https://moriond.in2p3.fr/2023/QCD/ |

| CERN BES Seminar 2023 | | | Yue-Hang Leung (only identified whom the organizers could invite; not an STC-selected talk) | |

| Hard Probes 2023 | March 26-31 | | Nihar Sahoo (Plenary Talk); Joern Putschke gave the talk on behalf of Nihar and STAR due to visa issues | https://wwuindico.uni-muenster.de/event/1409/ |

| IS2023 | June 19-23 | | Nicole Lewis | |

| Hadron2023 | June 5-9 | | Lori Vassiliev | |

| IWHSS2023 | June 26-29 | | | |

| Lund Jet Plane 2023 | Jul 3-7 | | Monika Robotkova | |

| 21st Lomonosov Conf | Aug 24-30 | | | |

| QuarkMatter23 | Sep 3-9 | | Rosi Reed (Plenary); Merge talk 1 - Zuowen Liu; Merge talk 2 - Chengdong Han; Merge talk 3 - Baoshan Xi; Merge talk 4 - Xiaoyu Liu; Merge talk 5 - Aditya Prasad Dash; Merge talk 6 - Yuan Su; Merge talk 7 - Ishu Aggarwal; Merge talk 8 - Matthew Harasty | |

| SPIN 2023 | Sep 23-29 | | Ting Lin | |

| ISMD 2023 | Aug 21–26 | | 1. Baryon Junctions - Zebo Tang; 2. Flow - Vinh Luong; 3. Jets - (no speaker listed) | |

| RHIC AUM 2023 | Aug 1-4 | | 1. Run 23 Report - Kong Tu; 2. STAR Highlight - Rongrong Ma; 3. Forward Upgrade - Xilin Liang; 4. Forward Tracker - Zhen Wang | |

| Baldin ISHEPP XXV | Sep 18-23, 2023 | | STAR Overview: Artem Korobitsin | |

| International Symposium on Physics in Collision (PIC 2023) | October 10-13, 2023 | | 1. Mini-review (15+3 min) on spin physics of STAR - Jae Nam | |

| CFNS Workshop | Nov 6-9, 2023 | | 1. Recent STAR heavy flavor and quarkonia study highlight (15+5) - Wei Zhang; 2. Recent STAR spin studies (15+5) - Dmitry Kalinkin | |

| MPI@LHC | Nov 20-24, 2023 | | WG1. STAR measurements sensitive to Hadronization and UE/MPI - Leszek Kosarzewski; WG3. Measurements of azimuthal anisotropy in small systems at RHIC (summary) - (no speaker listed) | |

| UPC International Workshop | Dec 11-15, 2023 | | 1. An overview talk on the latest UPC results - David Tlusty; 2. Two-photon production of dilepton pairs in UPC (Session 2) - Wangmei Zha; 3. Two-photon production of dilepton pairs in events with nuclear overlap (Session 5) - Zhang Li | |

| Excited QCD 2024 | Jan 14-20, 2024 | Nov 15, 2023 | Exotic Hadrons or Heavy Ions (20+10) - Gavin Wilks | |

| EEMI 2024 | February 19-21, 2024 | | STAR fixed target - Sharang Rav Sharma | |

| QWG 2024 | Feb 26-March 1, 2024 | | Recent results from STAR in heavy-ion collisions (15'+5') - Nihar Sahoo; Recent results from STAR in p+p collisions (15'+5') - Md. Nasim | https://web.iisermohali.ac.in/dept/physics/QWG2024/index.html |

| Moriond 2024 | March 31-April 7, 2024 | | Recent Cold QCD Results from STAR - Ting Lin; Recent Highlights from STAR BES Phase 2 - Dylan Neff | |

| DIS 2024 | April 8-12, 2024 | Feb 9, 2024 | Overview of STAR Spin and 3D Structure - Xiaoxuan Chu; Recent heavy flavor measurements from RHIC (15'+5') - Veronika Prozorova | |

| CPOD 2024 | May 20-24, 2024 | March 1, 2024 | STAR Overview - Sooraj Radhakrishnan; Net Proton in BES-II - Ashish Pandav; Measurement of Deuteron-Lambda Correlation for STAR - Yu Hu | |

| BOOST 2024 | July 29-August 2, 2024 | May 19, 2024 | Diptanil Roy | |

| SQM 2024 | June 3, 2024 | February 23, 2024 | STAR Highlights (28'+2') - Qian Yang; Joint abstract: Light Nuclei at BES-II - Yixuan Jin | |

| Transversity 2024 | June 3-7, 2024 | | Transversity and TMDs - Bassam Aboona; IFF at 200 and 500 GeV - Bernd Surrow | |

| AUM 2024 | June 13-14, 2024 | | STAR Run 2024 Report - Jaroslav Adam; STAR Highlights - Yicheng Feng; STAR Open Heavy Flavor - Ondrej Lomicky; STAR Heavy Quarkonia - Wei Zhang; STAR Flow Highlights - Priyanshi Sinha; STAR Vorticity - Xingrui Gou; STAR Spin alignment - Diyu Shen; STAR AI/ML - Hannah Harrison-Smith | |

| CAARI-SNEAP 2024 | July 21-26, 2024 | May 2, 2024 | Latest progress in high energy nuclear physics and the future Electron-Ion Collider opportunity - (no speaker listed) | |

| INT 2024 | August 19-23, 2024 | | STAR heavy ions perspectives - Grigory Nigmatkulov | |

| EXA/LEAP 2024 | August 26-30, 2024 | April 14, 2024 | (Anti)hypertriton production - Hao Qiu | https://www.oeaw.ac.at/smi/talks-and-events/exa/exa-leap-2024 |

| New Trends in High Energy Physics 2024 | September 2-5, 2024 | June 16, 2024 | 1. Collective properties of the nuclear matter at extreme conditions - Vipul Bairathi (direct invite); 2. Correlations and Fluctuations - Daniel Wielanek; 3. Spin Physics - Ken Barish | |

| ICNFP 2024 | August 26-September 4, 2024 | July 5, 2024 | STAR Highlight (non-spin) - Barbara Trzeciak; STAR Spin Highlight - Ting Lin; STAR Correlations/fluctuations Overview - Yu Hu; STAR FCV Overview - Yicheng Feng; STAR HP Overview - Tanmay Pani; STAR LFS/UPC Overview - (no speaker listed); STAR Cold-QCD Overview - Xilin Liang | |

| Diffraction 2024 | September 8-14, 2024 | May 31, 2024 | UPC+photonuclear RHIC results & prospects (20'+5') - Wangmei Zha; PDFs at low/high-x & saturation - Zilong Chang | |

| Hard Probes 2024 | September 22-27, 2024 | May 31, 2024 | STAR Highlight Overview (30') - Isaac Mooney | |

| NICA 2024 | November 26, 2024 | | Overview of femtoscopy results from STAR - Vinh Luong | |

| Zimányi School 2024 | December 2-6, 2024 | | STAR Overview - Hanna Zbroszczyk | |

| HF-HNC 2024 | December 6-11, 2024 | | Latest measurements of heavy flavor production - Dandan Shen | |

| HEPNP 2025 | January 6-10, 2025 | December 20, 2024 | STAR Overview - Sonia Kabana | |

| GHP 2025 | March 14-16, 2025 | | (Plenary) Results from Beam Energy Scan II - Shusu Shi | |

| QM 2025 | April 7, 2025 | Nov 15, 2024 | (Plenary) STAR Highlight - Sooraj Radhakrishnan; 877+914: Ankita Singh Nain (Panjab U); 785+875: Sharang Rav Sharma (IISER Tirupati); 817+809: Hongcan Li (CCNU/UCAS); 806+812: Weiguang Yuan (Tsinghua); 918: Jiaxuan Luo (USTC) | |

| UPC2025 | June 9-13, 2025 | Feb 2, 2025 | (Plenary) Experimental overview on UPC results at STAR - Chi Yang | |

| Moriond 2025 | March 30-April 6, 2025 | | Heavy-ion Talk - Li-Ke Liu; Cold-QCD Talk - Yixin Zhang | |

| AUM 2025 | May 20-24, 2025 | | 2025 STAR Run - Yu Hu; STAR Highlights - Chenliang Jin; HF Workshop - Kaifeng Shen | |

| ICNFP 2025 | July 17-31, 2025 | May 31, 2025 | "Non-spin" Highlight - Jakub Ceska; CF Overview - Rutik Manikandhan; LFS-UPC Overview - (no speaker listed); Spin Overview - Salvatore Fazio; HP Overview - (no speaker listed); Collective Dynamics Overview - Vipul Bairathi (direct invite); Upgrades and Future - David Kapukchyan | |

| Lomonosov 2025 | August 21-27, 2025 | April 15, 2025 | Review talk - Vinh Luong | |

| Initial Stages 2025 | September 7-12, 2025 | April 29, 2025 | Overview talk - Andrew Tamis | |

| PIC 2025 | October 20-23, 2025 | August 31, 2025 | Heavy ion physics experiments other than the LHC - Ahmed Hamed | |

| QWG 2025 | November 17-21, 2025 | | Recent STAR results on quarkonium production - (no speaker listed) | |

| C3NT 2026 | March 22 - April 4, 2026 | | Correlations between hard jets and soft components - (no speaker listed) | |

STAR Images

Here are some images from the STAR detector and collaboration.

- BNL image repository for the STAR Experiment (updated as new pictures appear)

- Display of STAR detector (2000, 2010, 2014, 2018 with EPD, 2019 with BES-II upgrade by hand, 2022_1, 2022_2)

- Repository of high-resolution figures for 2014 and before

- 2022 Event display with forward upgrade (pic1, pic2, pic3, pic4)

- 2014 Event display for gamma+jet

- 2014 Event display for di-jet events

- 2019 STAR Collaboration meeting picture (STAR Collaboration Meeting)

- 2018 STAR Collaboration picture (second organized collaboration picture in front of the detector)

- STAR Collaboration's group pictures (2014, 2015, ...)

- 10 Years group picture (2010)

- First STAR Collaboration image (also on BNL flickr) (2000)

- Virtual tour (2015)

- Animations / collision movies (2012)

- Event Images (2003)

- Photos from another day at STAR (2003)

- First Gold Beam-Beam Collision Events at RHIC at 100+100 GeV/c per beam recorded by STAR (2000)

- First Gold Beam-Beam Collision Events at RHIC at 30+30 GeV/c per beam recorded by STAR (2000)

- STAR Detector (2000)

- STAR construction picture of the day (June 17, 1999)

- TPC cosmic ray test images

- A library of STAR photos

- TPC Drift Simulation

Pixel related images

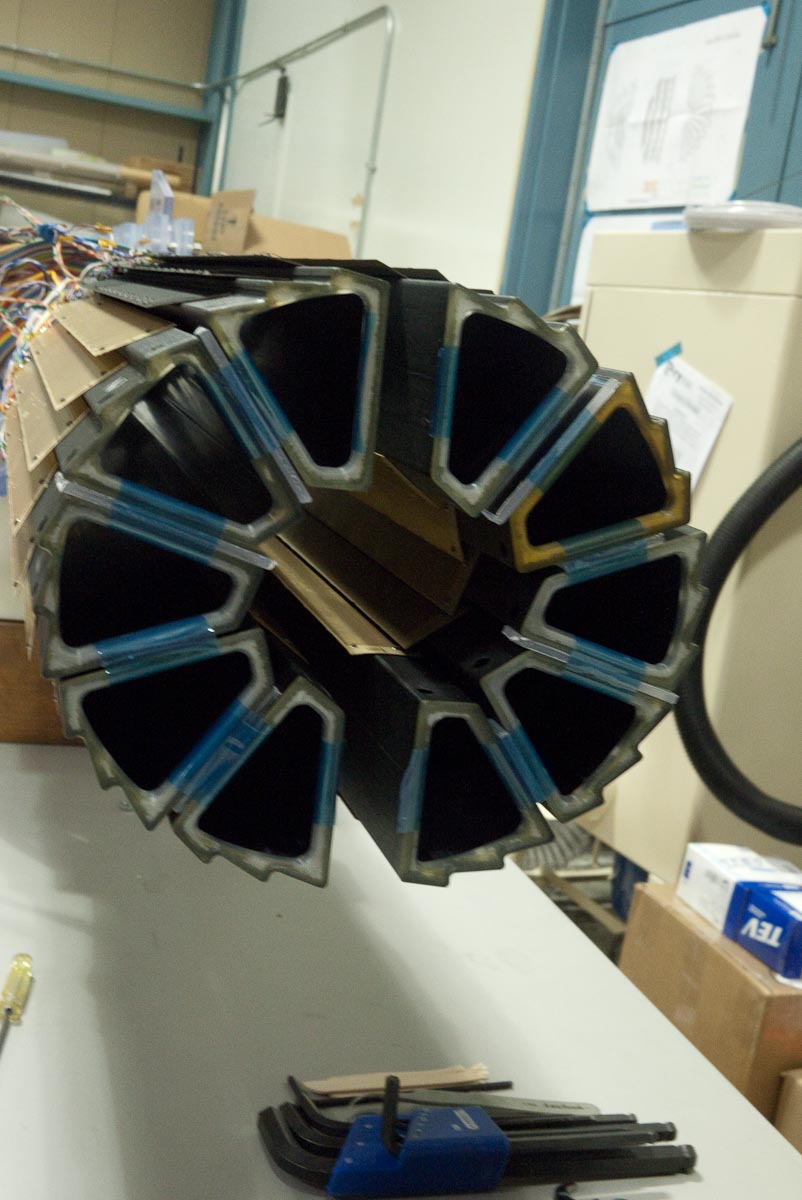

End view of pixel detector mockup

Participants

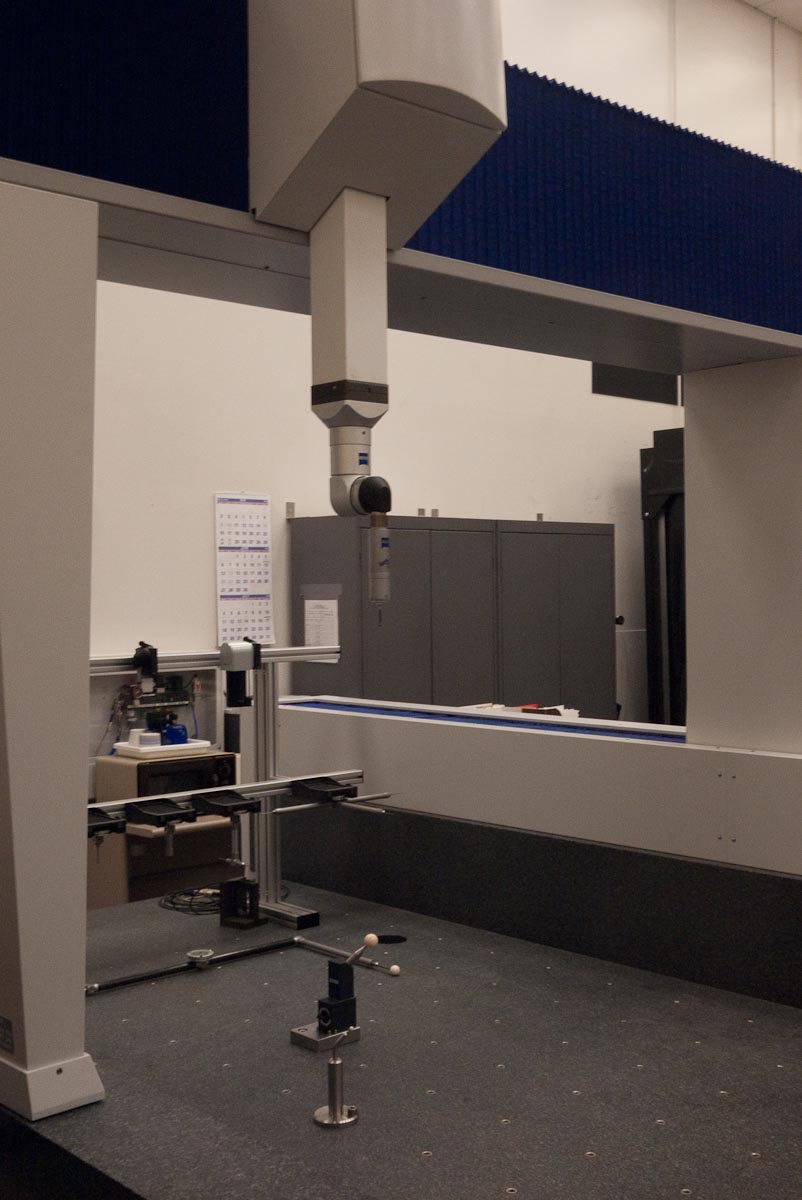

Photos of visit to LBNL metrology lab

Zeiss machine 1

Zeiss machine 2

SketchUp STAR


3D models of the STAR detector, the RHIC accelerator complex, a prototype of an event display for the STAR experiment in SketchUp

Tai Sakuma

I drew the STAR detector and the RHIC accelerator complex in SketchUp. Also, I built a prototype of an event display for the STAR experiment by using SketchUp Ruby API. This page shows image files generated in this project.

The STAR detector

A cross-sectional view of the STAR detector. This figure was used in Phys. Rev. D 86, 032006 (2012) (DOI: 10.1103/PhysRevD.86.032006).

The same 3D model as in the previous figure, but in perspective. This figure has often been used in presentations at scientific meetings.

RHIC

The RHIC accelerator complex

STAR detector subsystems

The Beam-Beam Counters (BBC)

The Barrel Electromagnetic Calorimeter (BEMC)

A BEMC Module

The geometry of the calorimeter towers in a BEMC module

The Time Projection Chamber (TPC)

The solenoidal magnet subsystem

Jet η and detector η

Jet η and detector η
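The figure for this heading is not reproduced in this text. As a hedged illustration of the usual distinction, assume that jet η is measured from the event vertex at z = z_vtx, while detector η is measured from the detector centre (z = 0) at the point where the jet axis crosses a cylinder of radius R, for example the calorimeter. Because η = -ln tan(θ/2) implies sinh η = cot θ, the axis reaches the cylinder at z = z_vtx + R sinh(η_jet), so detector η = asinh(sinh(η_jet) + z_vtx/R). The function name and the choice of R are illustrative assumptions, not the definition used in any particular STAR analysis:

```python
import math


def detector_eta(jet_eta: float, vertex_z: float, radius: float) -> float:
    """Pseudorapidity of a jet axis as seen from the detector centre (z = 0).

    jet_eta  -- pseudorapidity of the jet axis measured from the event vertex
    vertex_z -- vertex position along the beam axis
    radius   -- radius of the reference cylinder (same length unit as vertex_z);
                which cylinder to use is an assumption of this sketch
    """
    # eta = -ln tan(theta/2) gives sinh(eta) = cot(theta), so the axis that
    # starts at vertex_z crosses the cylinder at z = vertex_z + radius*sinh(eta).
    z_hit = vertex_z + radius * math.sinh(jet_eta)
    # Seen from the origin, that crossing point has cot(theta) = z_hit / radius.
    return math.asinh(z_hit / radius)
```

For a vertex at z = 0 the two definitions coincide; a vertex displaced toward positive z shifts the detector η of a jet at η_jet = 0 to positive values.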

Jets at three levels

The following three figures illustrate jets defined at the three different levels. These figures have been used several times at scientific meetings, e.g., on slide 4 of a presentation at APS 2010.

A high-pT back-to-back dijet event at the detector level. The solid trajectories indicate TPC track measurements while the lego blocks indicate energy deposited in the BEMC towers.

A high-pT back-to-back dijet event at the hadron level

A high-pT back-to-back dijet event at the parton level

Jet Patch Trigger

The locations of the twelve jet patches of the BJP1 trigger in Run 6. The size of a jet patch is 1.0 × 1.0 in the η-φ coordinate system. Each jet patch contains 400 BEMC towers. This figure was used in Phys. Rev. D 86, 032006 (2012) (DOI: 10.1103/PhysRevD.86.032006).
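The tower count per patch follows from simple arithmetic if you assume the nominal BEMC tower size of Δη × Δφ = 0.05 × 0.05. The barrel covers |η| < 1 over the full azimuth, so the twelve patches split it into 2 (in η) × 6 (in φ). Each patch therefore spans 2π/6 ≈ 1.05 rad in φ, which is nominally 1.0. Under these assumptions, the patches together account for the full barrel:

```latex
N_{\text{towers/patch}}
  = \frac{\Delta\eta_{\text{patch}}}{\Delta\eta_{\text{tower}}}
    \times \frac{\Delta\phi_{\text{patch}}}{\Delta\phi_{\text{tower}}}
  \approx \frac{1.0}{0.05} \times \frac{1.0}{0.05}
  = 20 \times 20 = 400,
\qquad
N_{\text{BEMC}} = 12 \times 400 = 4800 .
```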

Event Display

I developed a prototype of an event display for the STAR experiment using the SketchUp Ruby API. This prototype was used in jet analyses, in particular, in establishing a jet definition and determining background events.

Two snapshots showing a prototype of an event display for the STAR experiment

STAR Technical Support Group

On this site you'll be able to:

- See the latest shutdown schedule for the STAR Detector

- Search STAR Mechanical Drawings Database and view the actual drawings in DWG format

- See photographs showing the latest installations in the STAR Detector

- Request a Drawing/ECN number (see online forms)

- Request purchases of supplies and materials (see online forms)

Electronics Lab private network

Plan Overview:

In Room 1-232 (the "Electronics Lab"), our goal is to remove experimental test equipment and unsupported (or poorly supported) computer systems from the "public" network. These are likely sources of cyber security concerns and may benefit from a less volatile network environment than the campus network. To do this, we will create a private network in the Electronics Lab. One maintained Linux node will be dual-homed (connected to both the public and the private network) and will act as a gateway to the private network as needed. With the introduction of one or more Linux boxes, we eventually hope to retire the old Sun workstation completely.

The address space we will use is 192.168.140.0/255.255.255.0 (i.e., 192.168.140.0/24: 256 IP addresses, of which 254 are usable by hosts). This range is "registered" with ITD network operations as a STAR private network, so that if anything "escapes" from the private space into the campus network, they will know whom to call.
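As a quick check of the numbers above, the following sketch uses Python's standard `ipaddress` module, chosen here only for illustration. It shows that the quoted address/netmask pair is a /24 with 256 addresses. The network and broadcast addresses are reserved, which leaves 254 host addresses, 192.168.140.1 through 192.168.140.254:

```python
import ipaddress

# The lab's private address space, written exactly as quoted above (address/netmask).
net = ipaddress.IPv4Network("192.168.140.0/255.255.255.0")

print(net.with_prefixlen)     # 192.168.140.0/24
print(net.num_addresses)      # 256
print(net.network_address)    # 192.168.140.0   (network address, not assignable)
print(net.broadcast_address)  # 192.168.140.255 (broadcast address, not assignable)

hosts = list(net.hosts())     # excludes the network and broadcast addresses
print(len(hosts), hosts[0], hosts[-1])  # 254 192.168.140.1 192.168.140.254

print(net.is_private)         # True: 192.168.0.0/16 is RFC 1918 private space
```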

Given the short list of anticipated devices (below), no name server is planned, nor other common network services such as DHCP (subject to change as needed).

Devices Using This Network:

- The Linux gateway system -- presley.star.bnl.gov (Scientific Linux 4.5) using 192.168.140.1

- One Sun Ultra E450 -- "svtbmonitor" (Solaris 8) using 192.168.140.2

- One serial console server using 192.168.140.3

- Several (~4-5) rack-mounted MVME or similar devices at any time. An initial set have been assigned 192.168.140.11-15. A set of working sample boot parameters are included in a file attached to this page (see the links in the Attachments section below).

- Update August 4, 2009: Six more processors are being added to the network. The names trgfe6 through trgfe11 have been assigned 192.168.140.16-21 (i.e., added to /etc/hosts on presley and svtbmonitor).

- Several Windows PCs, including laptops that may come and go. These require manual network configuration. That is a small inconvenience that could perhaps be overcome with networking "profile" software that stores multiple configurations on the node (to be investigated, e.g., http://www.eusing.com/ipswitch/free_ip_switcher.htm).
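No name server is planned, so names on this network are resolved through /etc/hosts on each machine. The sketch below generates the trgfe6-trgfe11 entries from the August 4, 2009 update and checks that each address is a usable host address in the private subnet. The function `trgfe_hosts_entries` and its parameters are hypothetical, not an existing lab tool:

```python
import ipaddress

# Private lab subnet (192.168.140.0/255.255.255.0).
LAB_NET = ipaddress.IPv4Network("192.168.140.0/24")


def trgfe_hosts_entries(first=6, last=11, base_octet=16):
    """Return /etc/hosts lines mapping trgfe<first>..trgfe<last> to consecutive
    addresses starting at 192.168.140.<base_octet> (trgfe6 -> .16 ... trgfe11 -> .21)."""
    entries = []
    for offset, n in enumerate(range(first, last + 1)):
        ip = LAB_NET.network_address + base_octet + offset
        # Refuse anything outside the subnet or on its reserved addresses.
        reserved = (LAB_NET.network_address, LAB_NET.broadcast_address)
        if ip not in LAB_NET or ip in reserved:
            raise ValueError(f"{ip} is not a usable host address in {LAB_NET}")
        entries.append(f"{ip}\ttrgfe{n}")
    return entries


for line in trgfe_hosts_entries():
    print(line)
```

Keeping the mapping in one place like this makes it easier to keep the copies on presley and svtbmonitor identical.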

Status:

A Linux box named presley.star.bnl.gov is configured on the public network, with a second NIC using 192.168.140.1 so that it can act as a manual gateway node as needed. It is in the south-west corner of the lab. An account named daqlab has been created (contact Wayne or Danny for the password if appropriate).

As part of the effort to replace svtbmonitor, the home directory of svtbmonitor's testlab account has been copied over to presley in /home/svtbmonitor/testlab.

Danny and Phil identified a handful of files from svtbmonitor as important so far:

- emc.tcl

- smd_qa.tcl

- tower_qaodt.tcl

- grab (compiled C code to open a window and connect to a specified node on the serial console server)

- grab.bag (used by grab to "resolve" common names into ports on the console server)

The original versions of these files are all in their original (relative) paths. Modified versions for presley and the current networking setup were created and put in /home/daqlab/. The Tcl scripts were modified to account for the slightly different environment on presley and were demonstrated to work, at least for the basics; full functionality still needs to be confirmed by testing. The "grab" executable had to be recompiled from source. This was simple enough: the only required source file is "grab.c", and it needed just one minor change for the new environment on presley. So far so good there.

Two small "desktop" 10/100 Mbps switches have been connected to each other to serve as the "backbone":

- one is on a shelf on the west wall

- the other is on a shelf on the north wall

This is easy to expand with one or two 16-port switches if needed (well, it actually is needed as I write this...). (In fact, these switches have been rather fluid in the first months, coming and going and being swapped for others, all at a slight inconvenience to those of us trying to work with them...)

[Feb. 19, 2009 update -- the physical layout of switches and cables has been switched around many times since this description and I don't know the current state.]

The old networking (not to mention the serial lines) is a mess of cables, old hubs and switches that I plan to ignore as long as possible, though most of it should be removed unless it is nailed down. [Feb. 19, 2009 - some clean-up appears to have been done by the folks working in the lab.]

To Be Done, moving from svtbmonitor to presley (last updated, Feb 19, 2009):

Towards this end we've identified the following remaining tasks:

Configure backups of the home directories on presley as another safety net for the critical content from svtbmonitor that has been transferred over.

Jack Engelage and Hank Crawford have transferred a bunch of files from svtbmonitor to presley [are they done?]. A "trigger" group was created containing Jack, Chris and Hank. They have /home/trigger to share amongst themselves.

The biggies:

VxWorks compilers for Linux and booting the VME processors from presley. [Feb 19, 2009 update: Yury Gorbunov (and Jack Engelage?) have made successful boots of trgfe3 from presley via FTP, so it looks like svtbmonitor has a chance of being retired at some point. Sample boot parameters are attached]


Shutdown schedule

FY04

Project Start Date: Fri 1/2/04

Project Finish Date: Mon 10/25/04
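The Predecessors and Successors columns in the schedule appear to follow Microsoft Project's dependency notation. Each entry is a task ID, optionally followed by a link type and an optional lead or lag, as in "57FF-5 days". The link types are FS (finish-to-start, the default and usually omitted), SS (start-to-start), FF (finish-to-finish) and SF (start-to-finish). The minimal Python sketch below parses such cells under that assumed grammar. `Link` and `parse_links` are hypothetical helpers, not part of any STAR tool:

```python
import re
from dataclasses import dataclass

# Assumed grammar for one entry: task ID, optional link type, optional signed lag in days,
# e.g. "7", "16SS", "10FF", "57FF-5 days".
_ENTRY = re.compile(r"^(\d+)(FS|SS|FF|SF)?(?:([+-]\d+(?:\.\d+)?)\s*days?)?$")


@dataclass(frozen=True)
class Link:
    task_id: int
    kind: str        # "FS" (default), "SS", "FF" or "SF"
    lag_days: float  # negative values are leads


def parse_links(cell: str) -> list[Link]:
    """Parse a comma-separated Predecessors/Successors cell into Link objects."""
    links = []
    for entry in cell.split(","):
        entry = entry.strip()
        if not entry:
            continue  # empty cell or trailing comma
        m = _ENTRY.match(entry)
        if m is None:
            raise ValueError(f"unrecognized dependency: {entry!r}")
        task_id, kind, lag = m.groups()
        links.append(Link(int(task_id), kind or "FS", float(lag) if lag else 0.0))
    return links


print(parse_links("58,55FF-5 days"))
# [Link(task_id=58, kind='FS', lag_days=0.0), Link(task_id=55, kind='FF', lag_days=-5.0)]
```

With this reading, row 57 ("Install East & West Scaffold") must finish no later than five days before task 55 finishes.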

STAR FY04 v3 Shutdown

| ID | Name | Duration | Start_Date | Finish_Date | Resource_Names | Predecessors | Successors |

|---|---|---|---|---|---|---|---|

| 1 | STAR Detector Operations | 211.35 days | Mon 1/5/04 | Mon 10/25/04 | |||

| 2 | Physics Run FY04 | 96 days | Mon 1/5/04 | Sat 5/15/04 | | | 5FF |

| 3 | Start Shutdown Activities | 116.35 days | Sat 5/15/04 | Mon 10/25/04 | |||

| 4 | Move Detector into AB | 14.6 days | Sat 5/15/04 | Thu 6/3/04 | |||

| 5 | Purge TPC Gas | 1 day | Sat 5/15/04 | Sat 5/15/04 | | 2FF | 6SS |

| 6 | Subsystem Testing | 6 days | Sat 5/15/04 | Fri 5/21/04 | | 5SS | 7 |

| 7 | Retract PMD Detector | 0.1 days | Mon 5/24/04 | Mon 5/24/04 | Mech.Tech. | 6 | 8 |

| 8 | Remove East BBC Detector | 0.25 days | Mon 5/24/04 | Mon 5/24/04 | Mech.Tech. | 7 | 9 |

| 9 | Retract East Pole Tip | 0.5 days | Mon 5/24/04 | Mon 5/24/04 | Mech.Tech.[2] | 8 | 10FF |

| 10 | Install Scaffold | 0.25 days | Mon 5/24/04 | Mon 5/24/04 | Carpenter[2] | 9FF | 12,11 |

| 11 | Remove Shield Wall | 2.5 days | Mon 5/24/04 | Thu 5/27/04 | Riggers[3] | 10 | 26,23,16SS,18,135 |

| 12 | Remove West Pole Tip Utilities | 0.25 days | Mon 5/24/04 | Tue 5/25/04 | Mech.Tech. | 10 | 13 |

| 13 | Remove West BBC Detector | 0.25 days | Tue 5/25/04 | Tue 5/25/04 | Mech.Tech. | 12 | 14 |

| 14 | Retract West Pole Tip | 0.5 days | Tue 5/25/04 | Tue 5/25/04 | Mech.Tech.[2] | 13 | 15FF |

| 15 | Install Scaffold | 0.13 days | Tue 5/25/04 | Tue 5/25/04 | Carpenter[2] | 14FF | |

| 16 | Disconnect Electrical Power | 0.5 days | Mon 5/24/04 | Tue 5/25/04 | Electrician[2] | 11SS | 18,17 |

| 17 | Disconnect Magnet Power | 0.5 days | Tue 5/25/04 | Tue 5/25/04 | Elect.Tech.[2] | 16 | 18 |

| 18 | Remove Buss Bridge | 0.25 days | Thu 5/27/04 | Thu 5/27/04 | Mech.Tech.[2] | 16,17,11 | 19,21 |

| 19 | Disconnect Platform MCWS | 0.5 days | Thu 5/27/04 | Fri 5/28/04 | Mech.Tech. | 18 | 20 |

| 20 | Disconnect Subsystems Utilities | 1 day | Fri 5/28/04 | Mon 5/31/04 | Mech.Tech. | 19 | 26 |

| 21 | Remove Platform Bridge | 0.25 days | Thu 5/27/04 | Thu 5/27/04 | Mech.Tech.[2] | 18 | 26,22 |

| 22 | Remove South Platform Stairs | 0.25 days | Thu 5/27/04 | Fri 5/28/04 | Mech.Tech.[2] | 21 | 26,24 |

| 23 | Disconnect RHIC Vacuum Pipe | 0.5 days | Thu 5/27/04 | Thu 5/27/04 | Mech.Tech. | 11 | |

| 24 | Remove Seismic Anchors | 0.25 days | Fri 5/28/04 | Fri 5/28/04 | Mech.Tech.[2] | 22 | 25 |

| 25 | Disconnect Magnet LCW | 0.25 days | Fri 5/28/04 | Fri 5/28/04 | Mech.Tech.[2] | 24 | 26 |

| 26 | Roll Detector into AB | 1 day | Mon 5/31/04 | Tue 6/1/04 | Mech.Tech.[2] | 11,21,22,25,20 | 27 |

| 27 | Blow-out Magnet Coils | 0.5 days | Tue 6/1/04 | Tue 6/1/04 | Mech.Tech.[2] | 26 | 28 |

| 28 | Connect South Platform Stairs | 0.5 days | Tue 6/1/04 | Wed 6/2/04 | Mech.Tech.[2] | 27 | 29 |

| 29 | Connect Platform Bridge | 0.5 days | Wed 6/2/04 | Wed 6/2/04 | Mech.Tech.[2] | 28 | 31,30 |

| 30 | Connect Seismic Anchors | 0.5 days | Wed 6/2/04 | Thu 6/3/04 | Mech.Tech. | 29 | |

| 31 | Install Buss Bridge | 0.5 days | Wed 6/2/04 | Thu 6/3/04 | Mech.Tech.[2] | 29 | 32 |

| 32 | Connect Electrical Power | 0.5 days | Thu 6/3/04 | Thu 6/3/04 | Electrician[2] | 31 | 33SS |

| 33 | Connect Subsystems Utilities | 0.5 days | Thu 6/3/04 | Thu 6/3/04 | Mech.Tech. | 32SS | 34SS |

| 34 | Connect Platform MCWS | 0.5 days | Thu 6/3/04 | Thu 6/3/04 | Mech.Tech. | 33SS | 35 |

| 35 | Detector In AB | 0 days | Thu 6/3/04 | Thu 6/3/04 | | 34 | 37,117,121 |

| 36 | Remove East & West FTPC | 13.75 days | Thu 6/3/04 | Wed 6/23/04 | |||

| 37 | Remove E/W IFC Air Manifolds | 0.25 days | Thu 6/3/04 | Thu 6/3/04 | Mech.Tech. | 35 | 38 |

| 38 | Remove East & West Scaffold | 0.5 days | Thu 6/3/04 | Fri 6/4/04 | Carpenter[2] | 37 | 39 |

| 39 | Install West Platform & Rails | 1 day | Fri 6/4/04 | Mon 6/7/04 | Carpenter[2] | 38 | 40,41 |

| 40 | Remove West FTPC | 0.5 days | Mon 6/7/04 | Mon 6/7/04 | Mech.Tech. | 39 | 45 |

| 41 | Rig Installation Frame into AB | 0.5 days | Mon 6/7/04 | Mon 6/7/04 | Riggers[3] | 39 | 42SS |

| 42 | Install East Installation Frame | 0.5 days | Mon 6/7/04 | Mon 6/7/04 | Mech.Tech.[2] | 41SS | 43 |

| 43 | Install East Rails | 1 day | Mon 6/7/04 | Tue 6/8/04 | Mech.Tech.[2] | 42 | 44 |

| 44 | Remove East FTPC | 0.5 days | Tue 6/8/04 | Wed 6/9/04 | Mech.Tech.[2] | 43 | 45 |

| 45 | East & West FTPCs Removed | 0 days | Wed 6/9/04 | Wed 6/9/04 | | 40,44 | 46,48 |

| 46 | Maintenance on FTPCs | 10 days | Wed 6/9/04 | Wed 6/23/04 | Electronic Tech | 45 | |

| 47 | Remove Cone | 11.5 days | Wed 6/9/04 | Thu 6/24/04 | |||

| 48 | Disconnect Utilities | 4 days | Wed 6/9/04 | Tue 6/15/04 | Mech.Tech. | 45 | 49 |

| 49 | Remove Cone from IFC | 0.5 days | Tue 6/15/04 | Tue 6/15/04 | Mech.Tech. | 48 | 76,126 |

| 50 | Remove East Installation Frame | 1 day | Tue 6/22/04 | Wed 6/23/04 | Mech.Tech.[2] | 76,126 | 51FF |

| 51 | Remove Installation Frame from AB | 0.5 days | Wed 6/23/04 | Wed 6/23/04 | Riggers[3] | 50FF | 52 |

| 52 | Remove Installation Frame West | 1 day | Wed 6/23/04 | Thu 6/24/04 | Carpenter[2] | 51 | 53 |

| 53 | Cone Removed | 0 days | Thu 6/24/04 | Thu 6/24/04 | | 52 | 57 |

| 54 | Install East BEMC Modules | 47.5 days | Mon 6/14/04 | Wed 8/18/04 | |||

| 55 | (30) Modules Delivered | 4 days | Mon 6/14/04 | Fri 6/18/04 | Riggers[3] | 57FF-5 days | 56FF |

| 56 | (30) Modules Staged in AB | 4 days | Mon 6/14/04 | Fri 6/18/04 | Mech.Tech.[2] | 55FF | |

| 57 | Install East & West Scaffold | 0.5 days | Thu 6/24/04 | Fri 6/25/04 | Carpenter[2] | 53 | 58,55FF-5 days |

| 58 | Remove East Side Cables & Tray | 3 days | Fri 6/25/04 | Wed 6/30/04 | Mech.Tech.[2] | 57 | 59 |

| 59 | Erect Installation Fixture S.E. Upper | 0.5 days | Wed 6/30/04 | Wed 6/30/04 | Mech.Tech.[2] | 58 | 60 |

| 60 | Remove S.E. TPC Support Arm | 0.25 days | Wed 6/30/04 | Thu 7/1/04 | Mech.Tech.[2] | 59 | 61 |

| 61 | Install Modules 106-103 | 4 days | Thu 7/1/04 | Wed 7/7/04 | Mech.Tech.[2] | 60 | 62 |

| 62 | Erect Installation Fixture S.E. Lower | 0.5 days | Wed 7/7/04 | Wed 7/7/04 | Mech.Tech.[2] | 61 | 63 |

| 63 | Install Modules 102-89 | 14 days | Wed 7/7/04 | Tue 7/27/04 | Mech.Tech.[2] | 62 | 64 |

| 64 | Install S.E. TPC Support Arm | 0.5 days | Tue 7/27/04 | Wed 7/28/04 | Mech.Tech.[2] | 63 | 65 |

| 65 | Erect Installation Fixture N.E. Lower | 0.5 days | Wed 7/28/04 | Wed 7/28/04 | Mech.Tech.[2] | 64 | 66 |

| 66 | Install Modules 77-88 | 12 days | Wed 7/28/04 | Fri 8/13/04 | Mech.Tech.[2] | 65 | 67 |

| 67 | Remove Installation Fixture | 0.25 days | Fri 8/13/04 | Fri 8/13/04 | Mech.Tech.[2] | 66 | 68 |

| 68 | Install East Side Cables & Tray | 3 days | Fri 8/13/04 | Wed 8/18/04 | Mech.Tech.[2] | 67 | 69 |

| 69 | East BEMC Installation Complete | 0 days | Wed 8/18/04 | Wed 8/18/04 | | 68 | 82,72 |

| 70 | Install BEMC PMT Boxes | 35 days | Wed 7/14/04 | Wed 9/1/04 | |||

| 71 | Install 208 VAC Power | 15 days | Wed 7/14/04 | Wed 8/4/04 | Electrician[2] | 72SF-10 days | |

| 72 | Install PMT Boxes | 10 days | Wed 8/18/04 | Wed 9/1/04 | Mech.Tech.[2] | 69 | 73FF,71SF-10 days |

| 73 | Install Electronics | 20 days | Wed 8/4/04 | Wed 9/1/04 | Electronic Tech | 72FF | 74 |

| 74 | PMT Box Installation Complete | 0 days | Wed 9/1/04 | Wed 9/1/04 | | 73 | 144 |

| 75 | Install SSD | 20.5 days | Tue 6/15/04 | Wed 7/14/04 | |||

| 76 | Rig Cone to Cleanroom Roof | 0.5 days | Tue 6/15/04 | Wed 6/16/04 | Mech.Tech. | 49 | 77,50 |

| 77 | Install SSD on Cone | 20 days | Wed 6/16/04 | Wed 7/14/04 | Mech.Tech. | 76 | 78SS,80 |

| 78 | Install LV Crate & Cables | 5 days | Wed 6/16/04 | Wed 6/23/04 | Electronic Tech | 77SS | 79 |

| 79 | Install RDO Boxes | 5 days | Wed 6/23/04 | Wed 6/30/04 | Electronic Tech | 78 | |

| 80 | SSD Installed on Cone | 0 days | Wed 7/14/04 | Wed 7/14/04 | | 77 | 85 |

| 81 | Install Cone | 20.5 days | Wed 8/18/04 | Thu 9/16/04 | |||

| 82 | Rig Installation Frame into AB | 0.5 days | Wed 8/18/04 | Thu 8/19/04 | Riggers[3] | 69 | 83SS |

| 83 | Install East Installation Frame | 0.5 days | Wed 8/18/04 | Thu 8/19/04 | Mech.Tech.[2] | 82SS | 84 |

| 84 | Install Tables & Rails | 0.5 days | Thu 8/19/04 | Thu 8/19/04 | Mech.Tech. | 83 | 85 |

| 85 | Rig Cone to Rails | 0.5 days | Thu 8/19/04 | Fri 8/20/04 | Mech.Tech.[2] | 84,80 | 86 |

| 86 | Survey SSD to SVT | 2 days | Fri 8/20/04 | Tue 8/24/04 | Surveyors[2] | 85 | 87 |

| 87 | Complete SSD Installation | 2 days | Tue 8/24/04 | Thu 8/26/04 | Mech.Tech. | 86 | 88 |

| 88 | Test Utility Connections | 1 day | Thu 8/26/04 | Fri 8/27/04 | Electronic Tech | 87 | 89FF |

| 89 | Clean TPC IFC | 1 day | Thu 8/26/04 | Fri 8/27/04 | Mech.Tech. | 88FF | 90 |

| 90 | Install Cone in Detector | 0.5 days | Fri 8/27/04 | Fri 8/27/04 | Mech.Tech. | 89 | 91 |

| 91 | Test IFC High Voltage | 0.5 days | Fri 8/27/04 | Mon 8/30/04 | Electronic Tech | 90 | 92 |

| 92 | Survey TPC to Magnet | 2 days | Mon 8/30/04 | Wed 9/1/04 | Surveyors[2] | 91 | 93 |

| 93 | Survey SVT to TPC | 2 days | Wed 9/1/04 | Fri 9/3/04 | Surveyors[2] | 92 | 94 |

| 94 | Install East Partitions | 0.5 days | Fri 9/3/04 | Fri 9/3/04 | Mech.Tech. | 93 | 110 |

| 95 | Connect Water Lines | 0.5 days | Mon 9/6/04 | Tue 9/7/04 | Mech.Tech. | 110 | 96 |

| 96 | Connect RDO Cables | 1 day | Tue 9/7/04 | Wed 9/8/04 | Electronic Tech | 95 | 97 |

| 97 | Remove East Rails from IFC | 0.5 days | Wed 9/8/04 | Wed 9/8/04 | Mech.Tech.[2] | 96 | 98 |

| 98 | Remove East Installation Frame | 0.5 days | Wed 9/8/04 | Thu 9/9/04 | Mech.Tech.[2] | 97 | 99FF |

| 99 | Remove Installation Frame from AB | 0.25 days | Thu 9/9/04 | Thu 9/9/04 | Riggers[3] | 98FF | 100 |

| 100 | East Side Complete | 0 days | Thu 9/9/04 | Thu 9/9/04 | | 99 | 107,101 |

| 101 | Install West Partitions | 0.5 days | Thu 9/9/04 | Thu 9/9/04 | Mech.Tech. | 100 | 112 |

| 102 | Connect Water Lines | 0.5 days | Mon 9/13/04 | Mon 9/13/04 | Mech.Tech. | 114 | 103 |

| 103 | Connect RDO Cables | 1 day | Mon 9/13/04 | Tue 9/14/04 | Electronic Tech | 102 | 104 |

| 104 | Remove West Rails from IFC | 0.5 days | Tue 9/14/04 | Wed 9/15/04 | Mech.Tech. | 103 | 105 |

| 105 | Remove Installation Frame West | 1 day | Wed 9/15/04 | Thu 9/16/04 | Carpenter[2] | 104 | 106 |

| 106 | West Side Complete | 0 days | Thu 9/16/04 | Thu 9/16/04 | | 105 | 107 |

| 107 | Cone Installation Complete | 0 days | Thu 9/16/04 | Thu 9/16/04 | | 100,106 | 144 |

| 108 | Install FTPC East & West in AB | 12.5 days | Wed 8/25/04 | Mon 9/13/04 | |||

| 109 | Run & Test N. Platform | 5 days | Wed 8/25/04 | Wed 9/1/04 | Electronic Tech | 110SF-2 days | |

| 110 | Install East FTPC in Detector | 1 day | Fri 9/3/04 | Mon 9/6/04 | Mech.Tech.[2] | 94 | 115,109SF-2 days,95 |

| 111 | Run & Test N. Platform | 5 days | Wed 9/1/04 | Wed 9/8/04 | Electronic Tech | 114SF-2 days | |

| 112 | Remove West Scaffold | 0.25 days | Thu 9/9/04 | Fri 9/10/04 | Carpenter[2] | 101 | 113 |

| 113 | Install West Platform & Rails | 0.25 days | Fri 9/10/04 | Fri 9/10/04 | Carpenter[2] | 112 | 114 |

| 114 | Install West FTPC in Detector | 1 day | Fri 9/10/04 | Mon 9/13/04 | Mech.Tech.[2] | 113 | 115,111SF-2 days,102 |

| 115 | FTPC Installed in AB | 0 days | Mon 9/13/04 | Mon 9/13/04 | | 114,110 | |

| 116 | PMD Maintenance | 40 days | Thu 6/3/04 | Thu 7/29/04 | |||

| 117 | Subsystem Maintenance | 30 days | Thu 6/3/04 | Thu 7/15/04 | | 35 | 118 |

| 118 | Detector Commissioning | 10 days | Thu 7/15/04 | Thu 7/29/04 | | 117 | 119 |

| 119 | PMD Maintenance Complete | 0 days | Thu 7/29/04 | Thu 7/29/04 | | 118 | |

| 120 | EEMC Maintenance | 90 days | Thu 6/3/04 | Thu 10/7/04 | |||

| 121 | Maintenance | 50 days | Thu 6/3/04 | Thu 8/12/04 | | 35 | 122SS |

| 122 | MAPMT Installation | 80 days | Thu 6/3/04 | Thu 9/23/04 | Mech.Tech.[2] | 121SS | 123 |

| 123 | Subsystem Testing | 10 days | Thu 9/23/04 | Thu 10/7/04 | | 122 | 124 |

| 124 | EEMC Maintenance Complete | 0 days | Thu 10/7/04 | Thu 10/7/04 | | 123 | |

| 125 | TPC Maintenance | 50 days | Tue 6/15/04 | Tue 8/24/04 | |||

| 126 | Repair IFC Strip Short | 5 days | Tue 6/15/04 | Tue 6/22/04 | Mech.Tech. | 49 | 127,50,131SS |

| 127 | Modify Resistor Chain | 5 days | Tue 6/22/04 | Tue 6/29/04 | Mech.Tech. | 126 | 128 |

| 128 | FEE & RDO Maintenance | 40 days | Tue 6/29/04 | Tue 8/24/04 | Electronic Tech | 127 | 129 |

| 129 | TPC Maintenance Complete | 0 days | Tue 8/24/04 | Tue 8/24/04 | | 128 | |

| 130 | TOF Maintenance | 15 days | Tue 6/15/04 | Tue 7/6/04 | |||

| 131 | Tray Maintenance | 10 days | Tue 6/15/04 | Tue 6/29/04 | | 126SS | 132 |

| 132 | Detector Testing | 5 days | Tue 6/29/04 | Tue 7/6/04 | | 131 | 133 |

| 133 | TOFp Maintenance Complete | 0 days | Tue 7/6/04 | Tue 7/6/04 | | 132 | |

| 134 | FPD Installation | 20 days | Thu 5/27/04 | Thu 6/24/04 | |||

| 135 | Install West Detectors | 10 days | Thu 5/27/04 | Thu 6/10/04 | Mech.Tech. | 11 | 136 |

| 136 | Install N. E. Stand for 20-cm Travel | 5 days | Thu 6/10/04 | Thu 6/17/04 | Mech.Tech.[2] | 135 | 137 |

| 137 | Detector Commissioning | 5 days | Thu 6/17/04 | Thu 6/24/04 | | 136 | 138 |

| 138 | FPD Installation Complete | 0 days | Thu 6/24/04 | Thu 6/24/04 | | 137 | 140 |

| 139 | BBC Maintenance | 15 days | Thu 6/24/04 | Thu 7/15/04 | |||

| 140 | Subsystem Maintenance | 10 days | Thu 6/24/04 | Thu 7/8/04 | | 138 | 141 |

| 141 | Detector Commissioning | 5 days | Thu 7/8/04 | Thu 7/15/04 | | 140 | 142 |

| 142 | BBC Maintenance Complete | 0 days | Thu 7/15/04 | Thu 7/15/04 | | 141 | |

| 143 | Move Detector into WAH | 27 days | Thu 9/16/04 | Mon 10/25/04 | |||

| 144 | Disconnect Electrical Power | 0.5 days | Thu 9/16/04 | Thu 9/16/04 | Electrician[2] | 107,74 | 147,145SS,146SS |

| 145 | Disconnect Platform MCWS | 0.5 days | Thu 9/16/04 | Thu 9/16/04 | Mech.Tech. | 144SS | |

| 146 | Disconnect TPC Utilities | 0.5 days | Thu 9/16/04 | Thu 9/16/04 | Mech.Tech. | 144SS | |

| 147 | Remove Buss Bridge | 0.5 days | Thu 9/16/04 | Fri 9/17/04 | Mech.Tech.[2] | 144 | 148 |

| 148 | Remove Platform Bridge | 0.5 days | Fri 9/17/04 | Fri 9/17/04 | Mech.Tech.[2] | 147 | 149 |

| 149 | Remove South Platform Stairs | 1 day | Fri 9/17/04 | Mon 9/20/04 | Mech.Tech.[2] | 148 | 150 |

| 150 | Disconnect Seismic Anchors | 0.5 days | Mon 9/20/04 | Tue 9/21/04 | Mech.Tech.[2] | 149 | 151SS |

| 151 | Lower Detector to Rails | 0.5 days | Mon 9/20/04 | Tue 9/21/04 | Mech.Tech.[2] | 150SS | 152 |

| 152 | Roll Detector into WAH | 1.5 days | Tue 9/21/04 | Wed 9/22/04 | Mech.Tech.[2] | 151 | 153,154 |

| 153 | Connect Magnet LCW | 1 day | Wed 9/22/04 | Thu 9/23/04 | Mech.Tech.[2] | 152 | |

| 154 | Raise/Level Detector @ IR | 0.5 days | Wed 9/22/04 | Thu 9/23/04 | Mech.Tech.[2] | 152 | 155FF,163 |

| 155 | Connect Seismic Anchors | 0.5 days | Wed 9/22/04 | Thu 9/23/04 | Mech.Tech.[2] | 154FF | 159,156 |

| 156 | Install Buss Bridge | 0.5 days | Thu 9/23/04 | Thu 9/23/04 | Mech.Tech.[2] | 155 | 157,160 |

| 157 | Connect Electrical Power | 1 day | Thu 9/23/04 | Fri 9/24/04 | Electrician[2] | 156 | 158 |

| 158 | Connect Magnet Power | 1 day | Fri 9/24/04 | Mon 9/27/04 | Elect.Tech.[2] | 157 | |

| 159 | Connect All Subsystem Utilities | 2 days | Thu 9/23/04 | Mon 9/27/04 | Mech.Tech.[2] | 155 | 168,166 |

| 160 | Connect Platform MCWS | 0.5 days | Thu 9/23/04 | Fri 9/24/04 | Mech.Tech. | 156 | 168,161 |

| 161 | Connect South Platform Stairs | 1 day | Fri 9/24/04 | Mon 9/27/04 | Mech.Tech.[2] | 160 | 162 |

| 162 | Connect Platform Bridge | 0.5 days | Mon 9/27/04 | Mon 9/27/04 | Mech.Tech.[2] | 161 | 167 |

| 163 | Connect Vacuum Pipe & Supports | 0.5 days | Thu 9/23/04 | Thu 9/23/04 | Mech.Tech.[2] | 154 | 164 |

| 164 | Vacuum Pipe Bake-out | 5 days | Thu 9/23/04 | Thu 9/30/04 | Mech.Tech.[2] | 163 | 165 |

| 165 | Survey Beam Pipe & Magnet | 4 days | Thu 9/30/04 | Wed 10/6/04 | Surveyors[2] | 164 | |

| 166 | Detector Safety Certification | 2 days | Mon 9/27/04 | Wed 9/29/04 | | 159 | |

| 167 | Install Shield Wall | 3 days | Mon 9/27/04 | Thu 9/30/04 | Riggers[3] | 162 | 171 |

| 168 | Subsystems testing in WAH | 20 days | Mon 9/27/04 | Mon 10/25/04 | | 160,159 | 169SS+5 days |

| 169 | Install Pole Tips | 2 days | Mon 10/4/04 | Wed 10/6/04 | Mech.Tech.[2] | 168SS+5 days | 170 |

| 170 | Install BBC Detectors | 1 day | Wed 10/6/04 | Thu 10/7/04 | Mech.Tech.[2] | 169 | 171 |

| 171 | Shutdown Activities Complete | 0 days | Thu 10/7/04 | Thu 10/7/04 | | 167,170 | |

FY06

STAR FY06 Shutdown Schedule

Project Start Date: 6/26/06

Project Finish Date: 10/27/06

STAR FY06 v5 Shutdown

| ID | WBS | Name | Duration | Start Date | Finish Date | Resource Names | Predecessors | Successors |

|---|---|---|---|---|---|---|---|---|

| 1 | 1 | STAR Detector FY06 Shutdown | 87 days | 6/26/06 | 10/27/06 | |||

| 2 | 1.1 | Disconnect Detector Utilities | 10.75 days | 6/26/06 | 7/12/06 | |||

| 3 | 1.1.1 | Begin Shutdown Activities | 0 days | 6/26/06 | 6/26/06 | | | 4SS,114SS |

| 4 | 1.1.2 | Purge TPC Gas | 1 day | 6/26/06 | 6/26/06 | | 3SS | |

| 5 | 1.1.3 | Remove West BBC Detector | 0.25 days | 7/5/06 | 7/5/06 | Mech.Tech. | 114 | 6,103 |

| 6 | 1.1.4 | Disconnect West Pole Tip Utilities | 0.5 days | 7/5/06 | 7/5/06 | Mech.Tech.[2] | 5 | 7 |

| 7 | 1.1.5 | Retract West Pole Tip | 0.5 days | 7/5/06 | 7/6/06 | Mech.Tech.[2] | 6 | 8FF |

| 8 | 1.1.6 | Install Scaffold | 0.25 days | 7/6/06 | 7/6/06 | Carpenter[2] | 7FF | 9 |

| 9 | 1.1.7 | Remove East BBC Detector | 0.25 days | 7/6/06 | 7/6/06 | Mech.Tech. | 8 | 10,103 |

| 10 | 1.1.8 | Disconnect East Pole Tip Utilities | 0.25 days | 7/6/06 | 7/6/06 | Elect.Tech.[2] | 9 | 11 |

| 11 | 1.1.9 | Retract East Pole Tip | 0.5 days | 7/6/06 | 7/7/06 | Mech.Tech.[2] | 10 | 12FF,15,13 |

| 12 | 1.1.10 | Install Scaffold | 0.25 days | 7/7/06 | 7/7/06 | Carpenter[2] | 11FF | 19 |

| 13 | 1.1.11 | Disconnect Electrical Power | 0.5 days | 7/7/06 | 7/7/06 | Electrician[2] | 11 | 15,14,17 |

| 14 | 1.1.12 | Disconnect Magnet Power | 0.5 days | 7/7/06 | 7/10/06 | Elect.Tech.[2] | 13 | 15 |

| 15 | 1.1.13 | Remove Buss Bridge | 0.25 days | 7/10/06 | 7/10/06 | Mech.Tech.[2] | 13,14,11 | 16 |

| 16 | 1.1.14 | Disconnect Platform MCWS | 0.5 days | 7/10/06 | 7/10/06 | Mech.Tech.[2] | 15 | 18 |

| 17 | 1.1.15 | Disconnect Subsystems Utilities | 2 days | 7/7/06 | 7/11/06 | Mech.Tech. | 13 | |

| 18 | 1.1.16 | Remove South Platform Stairs | 0.25 days | 7/11/06 | 7/11/06 | Mech.Tech.[2] | 16 | 21 |

| 19 | 1.1.17 | Remove pVPD Detectors | 0.25 days | 7/7/06 | 7/7/06 | Mech.Tech.[2] | 12 | 20 |

| 20 | 1.1.18 | Disconnect RHIC Vacuum Pipe | 3 days | 7/7/06 | 7/12/06 | Mech.Tech.[2] | 19 | 24 |

| 21 | 1.1.19 | Remove Seismic Anchors | 0.25 days | 7/11/06 | 7/11/06 | Mech.Tech.[2] | 18 | 22 |

| 22 | 1.1.20 | Disconnect Magnet Water | 0.25 days | 7/11/06 | 7/11/06 | Mech.Tech.[2] | 21 | 23 |

| 23 | 1.1.21 | Blow-out Magnet Coils | 1 day | 7/11/06 | 7/12/06 | Mech.Tech.[2] | 22 | 24 |

| 24 | 1.1.22 | Detector Utilities Disconnected | 0 days | 7/12/06 | 7/12/06 | | 23,20 | 26,57 |

| 25 | 1.2 | Hydraulic System Testing | 6.5 days | 7/12/06 | 7/21/06 | |||

| 26 | 1.2.1 | Run & Test Hydraulics | 5 days | 7/12/06 | 7/19/06 | Mech.Tech. | 24 | 27FF |

| 27 | 1.2.2 | Position Detector on Beamline | 0.5 days | 7/19/06 | 7/19/06 | Mech.Tech. | 26FF | 28SS |

| 28 | 1.2.3 | Survey Detector on Beamline | 2 days | 7/19/06 | 7/21/06 | Surveyors[2] | 27SS | 29 |

| 29 | 1.2.4 | Hydraulics Tests Complete | 0 days | 7/21/06 | 7/21/06 | | 28 | 31,67,132,85 |

| 30 | 1.3 | Remove Hilman Rollers | 5 days | 7/31/06 | 8/4/06 | |||

| 31 | 1.3.1 | Remove Roller Assemblies | 5 days | 7/31/06 | 8/4/06 | Mech.Tech.[2] | 29,123 | 32FF |

| 32 | 1.3.2 | Remove Roller Assemblies | 2 days | 8/3/06 | 8/4/06 | Riggers[3] | 31FF | 33FF |

| 33 | 1.3.3 | Crate & Ship Roller Assemblies | 1 day | 8/4/06 | 8/4/06 | Riggers[2],Carpenter[2] | 32FF | 34FF |

| 34 | 1.3.4 | Roller Assembly Removal Complete | 0 days | 8/4/06 | 8/4/06 | | 33FF | |

| 35 | 1.4 | Remove West FPD++ Detector | 23.5 days | 7/5/06 | 8/7/06 | |||

| 36 | 1.4.1 | Disconnect Cabling | 5 days | 7/5/06 | 7/11/06 | Mech.Tech. | 114 | 37SS |

| 37 | 1.4.2 | Remove Existing Cells | 13 days | 7/5/06 | 7/21/06 | Mech.Tech. | 36SS | 38 |

| 38 | 1.4.3 | Remove Cabling | 5 days | 7/31/06 | 8/4/06 | Mech.Tech. | 37,123 | 39 |

| 39 | 1.4.4 | Remove Enclosures & Shield Block | 0.5 days | 8/7/06 | 8/7/06 | Riggers[3] | 38 | 125,40 |

| 40 | 1.4.5 | FPD++ Removal Complete | 0 days | 8/7/06 | 8/7/06 | | 39 | 95 |

| 41 | 1.5 | East FTPC Maintenance | 15 days | 8/7/06 | 8/25/06 | |||

| 42 | 1.5.1 | Remove East IFC Air Manifolds | 0.5 days | 8/7/06 | 8/7/06 | Mech.Tech. | 67,123 | 43 |

| 43 | 1.5.2 | Remove East Scaffold | 0.5 days | 8/7/06 | 8/7/06 | Carpenter[2] | 42 | 44 |

| 44 | 1.5.3 | Rig East FTPC Platform | 0.5 days | 8/8/06 | 8/8/06 | Riggers[3] | 43 | 45SS |

| 45 | 1.5.4 | Install East FTPC Platform | 0.5 days | 8/8/06 | 8/8/06 | Carpenter[2] | 44SS | 46 |

| 46 | 1.5.5 | Install East FTPC Rails | 0.5 days | 8/8/06 | 8/8/06 | Mech.Tech.[2] | 45 | 47 |

| 47 | 1.5.6 | Remove East FTPC | 1 day | 8/9/06 | 8/9/06 | Mech.Tech.[2] | 46 | 48,74 |

| 48 | 1.5.7 | Replace East FTPC FEE | 5 days | 8/10/06 | 8/16/06 | Electronic Tech | 47 | 49 |

| 49 | 1.5.8 | Install FTPC on Rails & Test | 5 days | 8/17/06 | 8/23/06 | | 48 | 50FF |

| 50 | 1.5.9 | Install East FTPC | 0.5 days | 8/24/06 | 8/24/06 | Mech.Tech.[2] | 49FF,75 | 51 |

| 51 | 1.5.10 | Remove East FTPC Rails | 0.5 days | 8/24/06 | 8/24/06 | Mech.Tech.[2] | 50 | 52 |

| 52 | 1.5.11 | Rig East FTPC Platform | 0.5 days | 8/25/06 | 8/25/06 | Riggers[3] | 51 | 53SS |

| 53 | 1.5.12 | Remove East FTPC Platform | 0.5 days | 8/25/06 | 8/25/06 | Carpenter[2] | 52SS | 54 |

| 54 | 1.5.13 | Install East Scaffold | 0.5 days | 8/25/06 | 8/25/06 | Carpenter[2] | 53 | 55 |

| 55 | 1.5.14 | FTPC Maintenance Complete | 0 days | 8/25/06 | 8/25/06 | | 54 | 86,70 |

| 56 | 1.6 | SSD Maintenance | 27.25 days | 7/12/06 | 8/18/06 | |||

| 57 | 1.6.1 | Compressor Maintenance | 5 days | 7/12/06 | 7/19/06 | | 24 | |

| 58 | 1.6.2 | RDO maintenance | 5 days | 7/31/06 | 8/4/06 | Carpenter[2] | 67SS | 59 |

| 59 | 1.6.3 | Crate Maintenance | 10 days | 8/7/06 | 8/18/06 | Riggers[3] | 58,123 | 60 |

| 60 | 1.6.4 | SSD Maintenance Complete | 0 days | 8/18/06 | 8/18/06 | | 59 | |

| 61 | 1.7 | BEMC Maintenance | 40 days | 7/31/06 | 9/25/06 | |||

| 62 | 1.7.1 | Remove & Repair Crates | 20 days | 7/31/06 | 8/25/06 | Electronic Tech | 123 | 63 |

| 63 | 1.7.2 | Install PMT Box Remote Power | 20 days | 8/28/06 | 9/25/06 | Electronic Tech[2] | 62 | 64FF |

| 64 | 1.7.3 | PMT Box Maintenance | 20 days | 8/28/06 | 9/25/06 | Elect.Tech. | 63FF | 65 |

| 65 | 1.7.4 | BEMC Maintenance Complete | 0 days | 9/25/06 | 9/25/06 | | 64 | 145 |

| 66 | 1.8 | SVT RDO Box Relocation | 39 days | 7/31/06 | 9/22/06 | |||

| 67 | 1.8.1 | Remove RDO Boxes | 5 days | 7/31/06 | 8/4/06 | Electronic Tech | 29,123 | 68,42,58SS |

| 68 | 1.8.2 | Repair RDO Boxes | 20 days | 8/7/06 | 9/1/06 | Electronic Tech | 67 | 69 |

| 69 | 1.8.3 | Install West RDO Boxes | 2 days | 9/12/06 | 9/13/06 | Electronic Tech | 68,87 | 72,81,70 |

| 70 | 1.8.4 | Install Remote East RDO Boxes | 5 days | 9/14/06 | 9/20/06 | Mech.Tech. | 69,55 | 71 |

| 71 | 1.8.5 | Route East RDO Cables | 2 days | 9/21/06 | 9/22/06 | Electronic Tech[2] | 70 | 72 |

| 72 | 1.8.6 | SVT RDO Box Relocation Complete | 0 days | 9/22/06 | 9/22/06 | | 71,69 | 139,145 |

| 73 | 1.9 | HFT Prototype Installation | 10 days | 8/10/06 | 8/23/06 | |||

| 74 | 1.9.1 | Install East HFT Prototype | 10 days | 8/10/06 | 8/23/06 | Mech.Tech.[2] | 47 | 75 |

| 75 | 1.9.2 | HFT Prototype Installed | 0 days | 8/23/06 | 8/23/06 | | 74 | 50 |

| 76 | 1.10 | PMD Maintenance | 40 days | 7/31/06 | 9/25/06 | |||

| 77 | 1.10.1 | Replace Supermodules | 30 days | 7/31/06 | 9/11/06 | | 123 | 78 |

| 78 | 1.10.2 | Detector Maintenance | 10 days | 9/12/06 | 9/25/06 | | 77 | 79 |

| 79 | 1.10.3 | PMD Maintenance Complete | 0 days | 9/25/06 | 9/25/06 | | 78 | 145 |

| 80 | 1.11 | EEMC Maintenance | 15 days | 9/14/06 | 10/4/06 | |||

| 81 | 1.11.1 | PMT/MAPMT Box Maintenance | 12 days | 9/14/06 | 9/29/06 | Mech.Tech.[2] | 69,112 | 82SS |

| 82 | 1.11.2 | Subsystem Testing | 15 days | 9/14/06 | 10/4/06 | | 81SS | 83 |

| 83 | 1.11.3 | EEMC Maintenance Complete | 0 days | 10/4/06 | 10/4/06 | | 82 | 145,115 |

| 84 | 1.12 | TPC Maintenance | 35.75 days | 7/21/06 | 9/11/06 | |||

| 85 | 1.12.1 | Remove East/West Bad FEE/RDO | 5 days | 7/21/06 | 7/28/06 | Electronic Tech[2] | 29 | 88 |

| 86 | 1.12.2 | Install East FEE/RDO | 5 days | 8/28/06 | 9/1/06 | Electronic Tech[2] | 55 | 89,87 |

| 87 | 1.12.3 | Install West FEE/RDO | 5 days | 9/5/06 | 9/11/06 | Electronic Tech[2] | 86 | 89,69 |

| 88 | 1.12.4 | Maintenance on Gas Room UPS | 1 day | 7/28/06 | 7/31/06 | | 85 | 89 |

| 89 | 1.12.5 | TPC Maintenance Complete | 0 days | 9/11/06 | 9/11/06 | | 88,87,86 | 139,145,107 |

| 90 | 1.13 | TOF Maintenance | 5 days | 10/5/06 | 10/11/06 | |||

| 91 | 1.13.1 | Remove Old Tray | 2 days | 10/5/06 | 10/6/06 | Mech.Tech. | | 92 |

| 92 | 1.13.2 | Install New Trays | 3 days | 10/9/06 | 10/11/06 | Mech.Tech. | 91 | 93 |

| 93 | 1.13.3 | TOF Installation Complete | 0 days | 10/11/06 | 10/11/06 | | 92 | 115 |

| 94 | 1.14 | FMS Installation | 43 days | 8/7/06 | 10/6/06 | |||

| 95 | 1.14.1 | Install FMS Carriages | 5 days | 8/7/06 | 8/14/06 | Mech.Tech.[2] | 40 | 96 |

| 96 | 1.14.2 | Install FMS Cells | 30 days | 8/14/06 | 9/26/06 | Mech.Tech.[2] | 95 | 98FF,97SS |

| 97 | 1.14.3 | Install AC Power | 5 days | 8/14/06 | 8/21/06 | Electrician[2] | 96SS | 100 |

| 98 | 1.14.4 | Install FMS Enclosures | 5 days | 9/19/06 | 9/26/06 | Mech.Tech.[2] | 96FF | 99 |

| 99 | 1.14.5 | Install FMS Cabling | 8 days | 9/26/06 | 10/6/06 | Mech.Tech.[2] | 98 | 101 |

| 100 | 1.14.6 | Install Electronics Racks | 10 days | 8/21/06 | 9/5/06 | Electronic Tech[2] | 97 | 101 |

| 101 | 1.14.7 | FMS Installation Complete | 0 days | 10/6/06 | 10/6/06 | | 99,100 | 145 |

| 102 | 1.15 | BBC Maintenance | 25 days | 7/6/06 | 8/10/06 | |||

| 103 | 1.15.1 | Subsystem Maintenance | 20 days | 7/6/06 | 8/3/06 | | 5,9 | 104 |

| 104 | 1.15.2 | Detector Commissioning | 5 days | 8/3/06 | 8/10/06 | | 103 | 105 |

| 105 | 1.15.3 | BBC Maintenance Complete | 0 days | 8/10/06 | 8/10/06 | | 104 | |

| 106 | 1.16 | Trigger Maintenance | 10 days | 9/12/06 | 9/25/06 | |||

| 107 | 1.16.1 | Trigger Maintenance | 10 days | 9/12/06 | 9/25/06 | | 89 | 108 |

| 108 | 1.16.2 | Trigger Maintenance Complete | 0 days | 9/25/06 | 9/25/06 | | 107 | 145 |

| 109 | 1.17 | Pole Tip Hose Replacement | 5 days | 7/31/06 | 8/4/06 | |||

| 110 | 1.17.1 | Remove Old Hose | 2 days | 7/31/06 | 8/1/06 | Mech.Tech.[2] | 123 | 111 |

| 111 | 1.17.2 | Install New Braided Hose | 3 days | 8/2/06 | 8/4/06 | Mech.Tech.[2] | 110 | 112 |

| 112 | 1.17.3 | Hose Replacement Complete | 0 days | 8/4/06 | 8/4/06 | | 111 | 81 |

| 113 | 1.18 | Magnet Power Supply Maintenance & Test | 87 days | 6/26/06 | 10/27/06 | |||

| 114 | 1.18.1 | Power Supply Maintenance & Test | 5 days | 6/26/06 | 6/30/06 | Electronic Tech[2] | 3SS | 5,36 |

| 115 | 1.18.2 | Remove East/West Scaffold | 0.5 days | 10/12/06 | 10/12/06 | Carpenter[2] | 83,93 | 116SS |

| 116 | 1.18.3 | Install East Pole Tip | 1 day | 10/12/06 | 10/12/06 | Mech.Tech.[2] | 115SS | 117 |

| 117 | 1.18.4 | Install West Pole Tip | 1 day | 10/13/06 | 10/13/06 | Mech.Tech.[2] | 116 | 118,143FF |

| 118 | 1.18.5 | Power Supply Maintenance & Test | 10 days | 10/16/06 | 10/27/06 | Electronic Tech[2] | 117 | 119 |

| 119 | 1.18.6 | Power Supply Maintenance Complete | 0 days | 10/27/06 | 10/27/06 | | 118 | 146 |

| 120 | 1.19 | C-AD Maintenance | 49 days | 7/24/06 | 9/29/06 | |||

| 121 | 1.19.1 | SGIS Modifications | 10 days | 9/18/06 | 9/29/06 | Elect.Tech.[2] | | 130,144 |

| 122 | 1.19.2 | PS Sound Barrier Wall | 10 days | 7/31/06 | 8/11/06 | | 123 | |

| 123 | 1.19.3 | AC Substation Maintenance | 5 days | 7/24/06 | 7/28/06 | | | 62,110,124,128,77,42,59,31,67,38,132,122 |

| 124 | 1.19.4 | Control Room Modification | 10 days | 7/31/06 | 8/11/06 | | 123 | 126 |

| 125 | 1.19.5 | Install West Tunnel Shielding | 5 days | 8/7/06 | 8/14/06 | Riggers[3] | 39 | |

| 126 | 1.19.6 | DAQ Room A/C Upgrade | 10 days | 8/14/06 | 8/25/06 | | 124 | 127 |

| 127 | 1.19.7 | Fire Sprinkler Preaction Upgrade | 10 days | 8/28/06 | 9/11/06 | | 126 | 130 |

| 128 | 1.19.8 | MCW Flow Balance | 10 days | 7/31/06 | 8/11/06 | Mech.Tech.[2] | 123 | 129 |

| 129 | 1.19.9 | Seal Tower Basin | 5 days | 8/14/06 | 8/18/06 | Mech.Tech.[2] | 128 | |

| 130 | 1.19.10 | C-A Maintenance Complete | 0 days | 9/29/06 | 9/29/06 | | 127,121 | |

| 131 | 1.20 | Connect Detector Utilities | 64 days | 7/31/06 | 10/27/06 | |||

| 132 | 1.20.1 | Connect Magnet LCW | 1 day | 7/31/06 | 7/31/06 | Mech.Tech.[2] | 29,123 | 133 |

| 133 | 1.20.2 | Install Buss Bridge | 0.5 days | 8/1/06 | 8/1/06 | Mech.Tech.[2] | 132 | 134,137 |

| 134 | 1.20.3 | Connect Electrical Power | 1 day | 8/1/06 | 8/2/06 | Electrician[2] | 133 | 135,136 |

| 135 | 1.20.4 | Connect Magnet Power | 1 day | 8/2/06 | 8/3/06 | Elect.Tech.[2] | 134 | |

| 136 | 1.20.5 | Connect All Subsystem Utilities | 1 day | 8/2/06 | 8/3/06 | Mech.Tech.[2] | 134 | |

| 137 | 1.20.6 | Connect Platform MCWS | 0.5 days | 8/1/06 | 8/1/06 | Mech.Tech. | 133 | 138 |

| 138 | 1.20.7 | Connect South Platform Stairs | 1 day | 8/2/06 | 8/2/06 | Mech.Tech.[2] | 137 | |

| 139 | 1.20.8 | Connect Vacuum Pipe & Supports | 3 days | 9/25/06 | 9/27/06 | Mech.Tech.[2] | 72,89 | 140SS |

| 140 | 1.20.9 | Vacuum Pipe Bake-out | 10 days | 9/25/06 | 10/6/06 | Mech.Tech.[2] | 139SS | 141 |

| 141 | 1.20.10 | Install START Detectors | 0.5 days | 10/9/06 | 10/9/06 | Mech.Tech. | 140 | 142 |

| 142 | 1.20.11 | Survey Beam Pipe | 2 days | 10/9/06 | 10/11/06 | Surveyors[2] | 141 | 143 |

| 143 | 1.20.12 | Install BBC Detectors | 1 day | 10/13/06 | 10/13/06 | Mech.Tech.[2] | 142,117FF | |

| 144 | 1.20.13 | Detector Safety Certification | 5 days | 10/2/06 | 10/6/06 | | 121 | 145 |

| 145 | 1.20.14 | Subsystems testing in WAH | 5 days | 10/9/06 | 10/13/06 | | 144,65,108,101,72,79,83,89 | 146 |

| 146 | 1.20.15 | Shutdown Activities Complete | 0 days | 10/27/06 | 10/27/06 | | 145,119 | |
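In both schedules, the Predecessors and Successors columns appear to follow Microsoft Project's link notation: a bare task ID is a finish-to-start (FS) link, an SS, FF or SF suffix marks a start-to-start, finish-to-finish or start-to-finish link, and a trailing `+N days` or `-N days` adds a lag or a lead. As a minimal sketch, assuming every entry matches that `<ID>[type][±N days]` pattern, the Python below splits one cell into structured links. `parse_links` and `Link` are hypothetical helpers for illustration only, not part of any STAR or Microsoft tool.

```python
import re
from dataclasses import dataclass

# Hypothetical pattern for one link entry: a task ID, an optional
# link-type suffix, and an optional signed lag such as "-5 days".
LINK_RE = re.compile(r"^(\d+)(FS|SS|FF|SF)?(?:\s*([+-]\d+(?:\.\d+)?)\s*days?)?$")


@dataclass
class Link:
    task_id: int     # ID of the linked task (first column of the table)
    kind: str        # "FS", "SS", "FF" or "SF"
    lag_days: float  # positive = lag, negative = lead


def parse_links(field: str) -> list[Link]:
    """Split a comma-separated Predecessors/Successors cell into links."""
    links = []
    for token in field.split(","):
        token = token.strip()
        if not token:
            continue  # empty cell or trailing comma
        m = LINK_RE.match(token)
        if m is None:
            raise ValueError(f"unrecognised link entry: {token!r}")
        task_id, kind, lag = m.groups()
        # A bare ID has no type suffix and means finish-to-start.
        links.append(Link(int(task_id), kind or "FS", float(lag) if lag else 0.0))
    return links


if __name__ == "__main__":
    # Successors of FY04 task 110 ("Install East FTPC in Detector")
    for link in parse_links("115,109SF-2 days,95"):
        print(link)
```

For that FY04 entry the sketch yields a finish-to-start link to task 115, a start-to-finish link to task 109 with a 2-day lead, and a finish-to-start link to task 95. Keeping the default type explicit ("FS") makes the bare-ID case easy to tell apart from the suffixed ones.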

Users area

Home page area for STAR users.

Bedangadas Mohanty

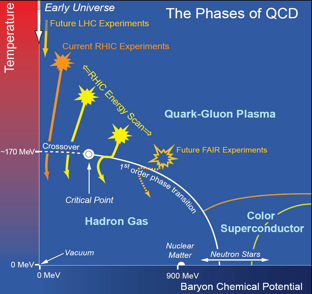

Figures from STAR publications

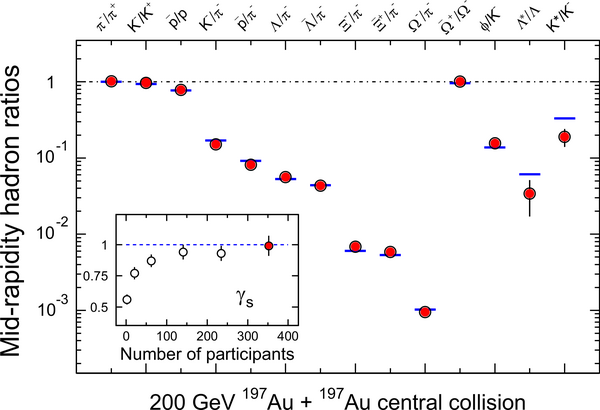

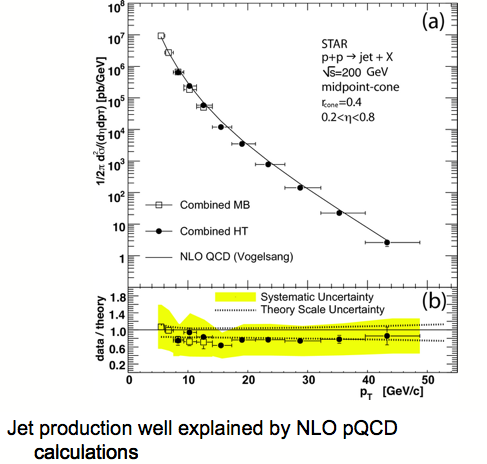

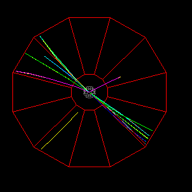

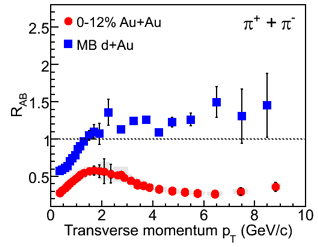

Panels: baryon-to-meson ratio; v2 vs. beam energy; low-pT spectra; particle ratio.

Panels: jet; typical dijet event; R_AA / R_dAu.

Theory Figures

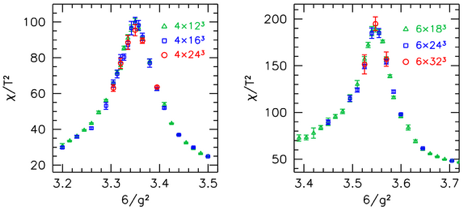

Panels: crossover; phase diagram.

genevb: miscellaneous working plots


Misc1

Misc2

misc3

Web master

The STAR website is divided into a few parts, most of which will eventually converge into a single Drupal-based content management system.

- The front pages are written primarily in PHP, a scripting language well suited to web development thanks to its cross-platform portability, approachable syntax, and broad feature set. PHP also has built-in support for MySQL databases, and much of the site's content (collaboration, publications, meetings, physics results, etc.) is generated dynamically from PHP-based MySQL queries. Those pages and the back-end database design were developed by Daniel Magestro.

- Many documents have been ported to the Drupal content management system, a free, modular software platform that allows an individual or a community of users to easily publish, manage and organize a wide variety of website content. Drupal will become our main content repository; STAR's Drupal implementation includes a database back-end, secondary services and roll-back capabilities. Several of the original in-house PHP modules have been ported to Drupal, thanks to Zbigniew Chajecki.

- For historical reasons, some of our documents are still kept in AFS; those areas are being phased out.

As a Collaboration, STAR relies on the web for much of its daily functioning: communication, documentation, organization and many other activities. Beyond serving the Collaboration, the web is also a means of introducing our experiment, sharing our enthusiasm, and conveying our results to the interested world, scientists and non-scientists alike.

For more information about this www site, contact the appropriate person below.

Click here to contribute news items and meeting announcements or a general comment.

| Contact | Responsibilities |
|---|---|
| Andrew Tamis, David Stewart | Central site design & maintenance; PHP scripting & support; MySQL database design; STAR publications |
| Jerome Lauret | Server configuration & support; Hypernews mailing lists; Drupal site configuration |
| Liz Mogavero | Collaboration support; meetings & events coordination |